Demystifying Pressure: Exploring the Types of Pressure References

Welcome to the world of pressure! Have you ever wondered about the force that pushes, compresses, and shapes our surroundings? Pressure is a fundamental concept that influences everything from weather patterns to tire performance. In this guide, we'll unravel the mysteries of pressure, explore different types of pressure measurements, and discover the practical applications that rely on this fascinating physical quantity. Get ready to dive into the world of pressure and uncover its significance in our everyday lives!

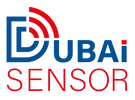

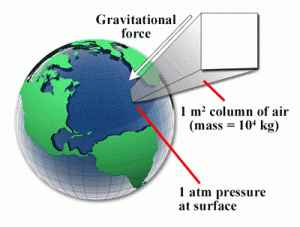

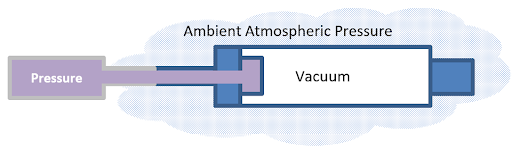

Fig 1. Different pressure references

Pressure Reference

Pressure measurement is a fundamental aspect of various industries and scientific disciplines, where understanding the reference point is crucial for accuracy and consistency. The choice of a reference pressure serves as the cornerstone for pressure measurements, allowing us to gauge the magnitude of pressure differences and the behavior of gases and fluids in diverse environments. This discussion delves into the significance of reference pressure, exploring key reference points such as atmospheric pressure, perfect vacuum, and sealed pressure, each with its unique role in ensuring precise and reliable pressure measurements. Understanding these reference points is essential for engineers, scientists, and professionals in fields where pressure plays a critical role.

Atmospheric Pressure

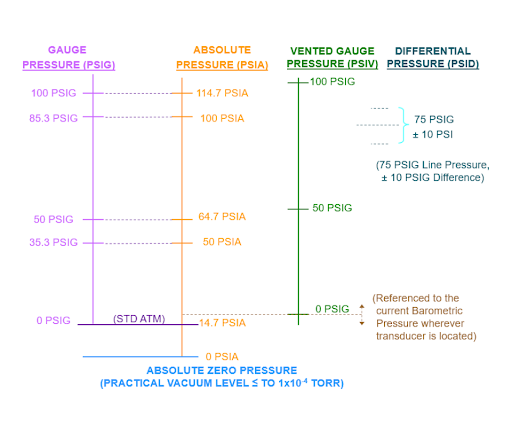

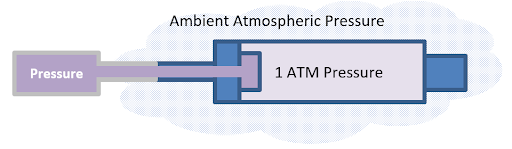

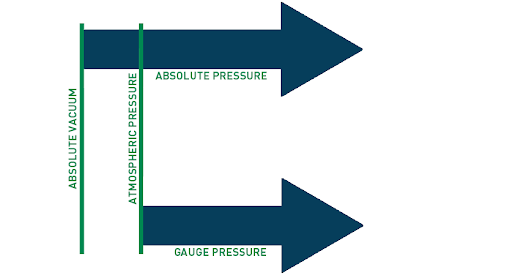

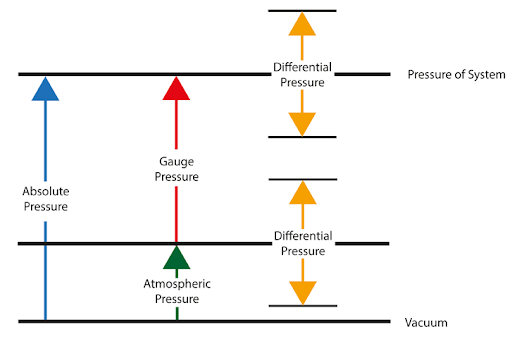

Atmospheric pressure is the force exerted by the Earth's atmosphere on a given area. It serves as a common reference point for pressure measurements because it represents the pressure at a specific location and altitude. Gauge pressure is measured relative to atmospheric pressure. For example, if a gauge pressure sensor reads 30 PSIG, it means the pressure is 30 psi above atmospheric pressure.

Perfect Vacuum

A perfect vacuum is a theoretical state containing no gas molecules at all, and therefore zero pressure. Absolute pressure is measured relative to a perfect vacuum, so its zero point is absolute zero pressure. As a result, an absolute reading includes the atmospheric pressure: absolute pressure equals gauge pressure plus atmospheric pressure.

Sealed Pressure (Zero Pressure)

Sealed pressure is a reference in which a fixed pressure, commonly standard sea-level atmospheric pressure (101.325 kPa, about 14.7 psi), is sealed inside the sensor, isolated from both the substance being measured and the surrounding atmosphere. The sensor reads zero when the measured pressure equals this sealed reference. This reference is often used in applications where a constant reference pressure is required, regardless of changes in atmospheric pressure. Pressure measured relative to a sealed reference is a type of gauge pressure (sealed gauge): it represents the pressure difference between the sealed reference and the substance being measured. This is distinct from vented gauge pressure, which uses the local atmospheric pressure as the reference point.
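A minimal sketch of how vented and sealed gauge readings diverge for the same absolute process pressure. It assumes the sealed reference is standard sea-level pressure (101.325 kPa); the function names and the example pressures are hypothetical, chosen only for illustration.

```python
# Sealed vs. vented gauge readings for the same absolute process pressure.
# Assumption: the sealed reference is standard sea-level pressure (101.325 kPa).
SEALED_REFERENCE_KPA = 101.325


def vented_gauge_kpa(p_abs_kpa: float, p_atm_local_kpa: float) -> float:
    """Vented gauge: referenced to the local atmosphere at measurement time."""
    return p_abs_kpa - p_atm_local_kpa


def sealed_gauge_kpa(p_abs_kpa: float,
                     p_sealed_kpa: float = SEALED_REFERENCE_KPA) -> float:
    """Sealed gauge: referenced to a fixed pressure trapped inside the sensor."""
    return p_abs_kpa - p_sealed_kpa


p_abs = 300.0        # hypothetical process pressure, kPa absolute
p_atm_local = 95.0   # hypothetical local atmosphere (e.g. at elevation), kPa
print(vented_gauge_kpa(p_abs, p_atm_local))  # 205.0
print(round(sealed_gauge_kpa(p_abs), 3))     # 198.675
```

For a given absolute pressure, the sealed reading stays fixed, while the vented reading shifts whenever the local atmospheric pressure changes with weather or elevation.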

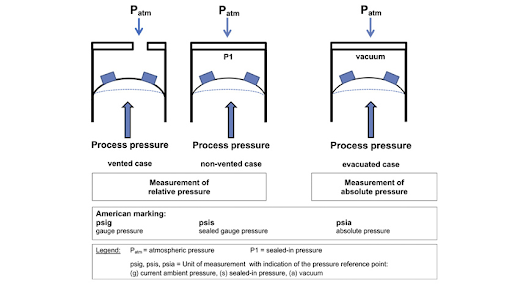

Fig 2. Gauge (Gage) Pressure

The choice of a pressure reference depends on the specific application and the requirements of the measurement system. It is essential to establish a consistent and accurate reference point to ensure meaningful and reliable pressure measurements.


Comparison Table

Here's a table comparing the different types of pressure references:

| Pressure Reference | Explanation | Example Use Cases |
|---|---|---|
| Atmospheric Pressure | The pressure exerted by the Earth's atmosphere at a specific location and altitude. | Meteorology, Aviation, Industrial Processes |
| Perfect Vacuum | A theoretical state with zero pressure, representing the complete absence of gas molecules. | Vacuum systems, pressurized containers, Astronomy |
| Sealed Pressure | A fixed reference pressure (typically standard sea-level atmospheric pressure) sealed inside the sensor, so readings do not follow changes in ambient pressure. | Laboratory Research, Calibration, Pressure Testing |

Table 1. Comparison of pressure references

Please note that this table provides a general overview of the different pressure references and their typical use cases. There may be additional nuances and applications for each type of pressure reference depending on specific industries or contexts.

How can you measure the Atmospheric Pressure?

Atmospheric pressure can be measured using various instruments. Here are three common methods for measuring atmospheric pressure:

- Mercury Barometer: A mercury barometer is a traditional instrument used to measure atmospheric pressure. It consists of a glass tube, closed at the top and filled with mercury, inverted into a dish of mercury. Atmospheric pressure acting on the mercury in the dish supports a column of mercury in the tube, with a near-vacuum above it. The height of the column is a measure of the atmospheric pressure and is typically reported in millimeters of mercury (mmHg) or inches of mercury (inHg); standard sea-level atmospheric pressure corresponds to 760 mmHg (about 29.92 inHg).

- Aneroid Barometer: An aneroid barometer is a mechanical device that uses a sealed, partially evacuated, flexible metal capsule, often made of an alloy such as beryllium copper or phosphor bronze, which expands or contracts with changes in atmospheric pressure. The movement of the capsule is mechanically amplified and displayed on a dial. Aneroid barometers are more compact and portable than mercury barometers, making them suitable for many applications.

- Digital Barometer: Digital barometers use electronic sensors to measure atmospheric pressure. These sensors may include strain gauge sensors, piezoelectric sensors, or capacitive sensors. The sensors detect the pressure changes and convert them into electrical signals, which are then processed and displayed as digital readings on a screen. Digital barometers are convenient for quick and accurate pressure measurements and are often used in weather stations, aviation, and personal weather devices.
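The mercury barometer described above works by hydrostatic balance: the atmosphere supports a column whose height h satisfies P = ρ·g·h. Below is a minimal Python sketch, assuming the density of mercury at 0 °C and standard gravity; real barometer readings are normally corrected for temperature and local gravity, which this sketch ignores. The function name is illustrative.

```python
# Convert a mercury-column height to pressure via hydrostatics, P = rho * g * h.
RHO_MERCURY_0C = 13595.1  # kg/m^3, mercury density at 0 °C (assumed)
G_STANDARD = 9.80665      # m/s^2, standard gravity


def mercury_column_to_pa(height_mm: float) -> float:
    """Return the pressure (Pa) that supports a mercury column of height_mm."""
    return RHO_MERCURY_0C * G_STANDARD * (height_mm / 1000.0)


print(round(mercury_column_to_pa(760.0)))  # 101325 (standard atmosphere)
```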

When using any barometer, calibration and adjustments may be necessary to ensure accurate readings. Because atmospheric pressure decreases with altitude, readings taken at different elevations differ even under identical weather conditions. To compare readings between locations, station pressure is usually corrected to an equivalent sea-level pressure using reference tables or formulas.

Overall, these methods provide means to measure atmospheric pressure accurately and are widely used in weather monitoring, aviation, research, and various industrial applications.

Fig 3. Vented or True Gauge (Gage)

What is Atmospheric Pressure used for?

Atmospheric pressure has various practical uses across different fields and industries. Here are some key applications of atmospheric pressure:

- Weather Forecasting: Atmospheric pressure is a fundamental parameter used in weather forecasting. Changes in atmospheric pressure can indicate the approach of weather systems such as high-pressure or low-pressure systems, which can affect weather patterns. Monitoring and analyzing atmospheric pressure data helps meteorologists predict and understand weather conditions, including the formation of storms, changes in air masses, and the development of weather fronts.

- Altitude Measurement: Atmospheric pressure decreases with increasing altitude. Therefore, atmospheric pressure can be used as a basis for determining elevation or altitude. Barometric altimeters, which rely on changes in atmospheric pressure, are commonly used in aviation and outdoor activities like hiking and mountaineering to estimate altitude.

- Barometric Pressure Compensation: Pressure sensors, altimeters, and other pressure-sensitive instruments are affected by changes in ambient (barometric) pressure. A measurement of the current atmospheric pressure serves as a reference to correct their readings for these changes, ensuring accurate measurements.

- Aviation: Atmospheric pressure is crucial in aviation for various purposes. It is used in the calculation of aerodynamic properties, aircraft performance, and flight planning. Atmospheric pressure data is used to determine standard atmospheric conditions for altitude and airspeed calculations. Additionally, the pressure altimeter in aircraft relies on atmospheric pressure to provide accurate altitude readings.

- Industrial Processes: Atmospheric pressure is taken into account in various industrial processes. For example, in chemical manufacturing, pressure differentials and variations in atmospheric pressure are considered in the design and operation of equipment like distillation columns, reactors, and vacuum systems. Understanding and controlling atmospheric pressure is crucial for maintaining process efficiency and safety.

- Human Health and Physiology: Atmospheric pressure affects human health and physiology. Changes in ambient pressure, such as during ascent or descent in an aircraft or while scuba diving, can affect the body, particularly the ears and sinuses. Monitoring atmospheric pressure can help individuals with certain medical conditions manage symptoms associated with pressure changes.

- HVAC Systems: Atmospheric pressure is used as a reference for designing and operating heating, ventilation, and air conditioning (HVAC) systems. It helps regulate airflow, manage air pressure differentials, and ensure proper ventilation in buildings. Pressure differentials and balance in HVAC systems contribute to energy efficiency, occupant comfort, and air quality.

These are just a few examples highlighting the importance of atmospheric pressure in diverse fields. Atmospheric pressure measurements and understanding its variations are essential for weather prediction, altitude determination, industrial processes, aviation, and numerous other practical applications.

Fig 4. Atmospheric pressure

Atmospheric Pressure Formula

The formula to calculate atmospheric pressure depends on the specific context and the units being used. However, one common formula used to estimate atmospheric pressure as a function of altitude is the barometric formula:

P = P₀ * (1 - (L * h) / T₀) ^ (g / (R * L))

Where:

P = Atmospheric pressure at a given altitude,

P₀ = Atmospheric pressure at a reference altitude (usually sea level),

L = Temperature lapse rate (rate of decrease of temperature with increasing altitude; 0.0065 K/m in the standard atmosphere),

h = Altitude above the reference altitude,

T₀ = Absolute temperature at the reference altitude, in kelvins (288.15 K at sea level in the standard atmosphere),

g = Acceleration due to gravity (standard value 9.80665 m/s²),

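and R = Specific gas constant for dry air (about 287.05 J/(kg·K)). If the universal gas constant R* (8.314 J/(mol·K)) is used instead, the exponent becomes g·M / (R*·L), where M is the molar mass of air (about 0.02896 kg/mol).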

It's important to note that the barometric formula is an approximation and may not account for all factors influencing atmospheric pressure accurately. Additionally, the formula assumes a standard atmosphere with a constant lapse rate and is most applicable in the troposphere (lower atmosphere).

Different units may be used for pressure, altitude, and temperature depending on the context, such as pascals (Pa), millibars (mb), feet (ft), or meters (m). Whatever units are chosen must be consistent, and T₀ must be an absolute temperature in kelvins (K); using degrees Celsius in this formula gives wrong results.

For precise atmospheric pressure calculations or specific applications, specialized meteorological equations or models may be employed that incorporate additional factors such as humidity, temperature variations, and weather patterns.

Overall, the barometric formula provides a general approach to estimating atmospheric pressure as a function of altitude, although it may be subject to limitations and simplifications in real-world scenarios.
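A minimal Python sketch of the barometric formula and its inverse, the relation a barometric altimeter relies on to turn a pressure reading into an altitude estimate. It assumes International Standard Atmosphere sea-level values (P₀ = 101325 Pa, T₀ = 288.15 K, L = 0.0065 K/m) and the specific gas constant for dry air, and it is only meaningful within the troposphere. The function names are illustrative.

```python
# Barometric formula (constant lapse rate) and its inverse.
# Assumed ISA sea-level constants; valid only in the troposphere (< ~11 km).
P0 = 101325.0     # Pa, reference pressure
T0 = 288.15       # K, reference temperature
L = 0.0065        # K/m, temperature lapse rate
G = 9.80665       # m/s^2, standard gravity
R_AIR = 287.05    # J/(kg*K), specific gas constant for dry air

EXPONENT = G / (R_AIR * L)  # about 5.256


def pressure_at_altitude(h_m: float) -> float:
    """P = P0 * (1 - L*h/T0) ** (g / (R*L)), with h in meters."""
    base = 1.0 - L * h_m / T0
    if base <= 0.0:
        raise ValueError("altitude outside the range of this model")
    return P0 * base ** EXPONENT


def altitude_from_pressure(p_pa: float) -> float:
    """Invert the formula above to estimate altitude (m) from pressure (Pa)."""
    return (T0 / L) * (1.0 - (p_pa / P0) ** (1.0 / EXPONENT))


p = pressure_at_altitude(1000.0)
print(f"{p / 1000:.1f} kPa")                  # 89.9 kPa
print(f"{altitude_from_pressure(p):.1f} m")   # 1000.0 m
```

An altimeter applies the inverse function to its measured pressure; in practice P₀ is replaced by the current local sea-level pressure setting rather than the standard value.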

How can you measure the Absolute Pressure?

Measuring absolute pressure involves determining the pressure relative to a perfect vacuum or absolute zero pressure. Here are a few methods commonly used to measure absolute pressure:

- Absolute Pressure Sensor: Absolute pressure sensors, also known as absolute pressure transducers or transmitters, are electronic devices specifically designed to measure absolute pressure. These sensors typically utilize various technologies such as piezoresistive, capacitive, or piezoelectric mechanisms to detect pressure changes. They are calibrated to provide direct measurements of absolute pressure, referencing it to a perfect vacuum or absolute zero. Absolute pressure sensors are commonly used in industrial processes, scientific research, and instrumentation applications.

- Vacuum Gauge: Vacuum gauges are specialized instruments used to measure pressures below atmospheric pressure, which includes absolute pressure measurements. There are different types of vacuum gauges, such as Pirani gauges, ionization gauges, and capacitance manometers, that are capable of measuring pressures in the vacuum range. These gauges are calibrated to provide absolute pressure readings, typically in units such as pascals (Pa) or torr.

- Calibration Standards: Calibration standards, often traceable to national standards, can be used to measure absolute pressure indirectly. These standards consist of highly accurate reference instruments, such as deadweight testers or piston gauges, which are calibrated against known pressure references. By applying known forces or pressures to the standard, the absolute pressure of the tested system can be determined by comparison.

- Combined Measurement: In some cases, absolute pressure is obtained by combining a gauge pressure measurement with an atmospheric pressure measurement. Adding the local atmospheric pressure to the gauge reading gives the absolute pressure. This method requires a reliable and accurate measurement of atmospheric pressure, such as from a separate atmospheric pressure sensor or local weather station data (published weather data is often corrected to sea level and must be converted back to local station pressure).
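The combined-measurement approach above is a single addition, P_abs = P_gauge + P_atm. A minimal sketch in psi, reusing the 30 PSIG example from earlier; the default atmosphere of 14.696 psi is an assumption (standard sea level), and the 12.2 psi high-altitude value is hypothetical.

```python
# Convert between gauge and absolute pressure: P_abs = P_gauge + P_atm.
# Default atmosphere is standard sea level (assumption); pass the local
# barometric reading when it is known.
STANDARD_ATM_PSI = 14.696  # psi, approximate standard atmospheric pressure


def gauge_to_absolute(p_gauge: float, p_atm: float = STANDARD_ATM_PSI) -> float:
    return p_gauge + p_atm


def absolute_to_gauge(p_abs: float, p_atm: float = STANDARD_ATM_PSI) -> float:
    return p_abs - p_atm


print(round(gauge_to_absolute(30.0), 3))        # 44.696 (30 PSIG in psia)
print(round(gauge_to_absolute(30.0, 12.2), 3))  # 42.2 at a hypothetical high-altitude site
```

A negative gauge value (a partial vacuum) works the same way and yields an absolute pressure below atmospheric.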

It's important to note that accurate measurement of absolute pressure often requires regular calibration and consideration of factors such as temperature, humidity, and environmental conditions. Additionally, selecting the appropriate measurement method depends on the specific application, desired accuracy, and range of pressures to be measured.

By employing these measurement techniques, engineers, scientists, and technicians can obtain accurate absolute pressure readings for various industrial, scientific, and research purposes.

Fig 5. Pressure scale

How can you use Absolute pressure as a pressure reference?

Absolute pressure can be used as a pressure reference by establishing it as a baseline or reference point for pressure measurements. Here's how absolute pressure can be utilized as a pressure reference:

- Calibration Standards: Absolute pressure can be used as a reference for calibrating pressure measurement instruments. Calibration standards, such as absolute pressure standards or deadweight testers, provide a known and stable absolute pressure. Instruments can be calibrated against these standards to ensure accuracy and traceability in pressure measurements. The absolute pressure standard serves as a reference point for calibration and comparison.

- Vacuum Calibration: Absolute pressure is used as a reference in vacuum calibration. Vacuum calibration is the process of calibrating instruments and sensors that measure pressures below atmospheric pressure, such as vacuum gauges or transducers. The calibration is performed relative to a known absolute pressure, typically a vacuum reference, to ensure accurate and reliable measurements in the vacuum range.

- Pressure Transducers and Sensors: Absolute pressure transducers and sensors are designed to directly measure pressure relative to absolute zero pressure or a perfect vacuum. These instruments can provide accurate absolute pressure readings and can be used as references in various applications. They are commonly employed in research, testing, and industrial processes where absolute pressure measurements are required.

- Scientific Experiments: Absolute pressure is often used as a reference in scientific experiments, particularly in physics, chemistry, and material science. Absolute pressure measurements allow researchers to establish a well-defined pressure baseline for their experiments. This is crucial for studying gas behaviors, investigating phase transitions, understanding material properties, and conducting controlled experiments under specific absolute pressure conditions.

By using absolute pressure as a reference, pressure measurements can be made relative to a known and consistent pressure baseline. This enables accurate comparisons, calibration, and traceability in various applications, ranging from scientific research and industrial processes to quality control and instrumentation.

Absolute zero pressure

Absolute zero pressure refers to the complete absence of pressure or the lowest possible pressure that can be achieved in a system. It represents a state of perfect vacuum where no gas molecules or particles are exerting any force or pressure.

In practical terms, achieving absolute zero pressure is nearly impossible as it would require the removal of all gas molecules and particles from a system, including the elimination of residual gases. However, the concept of absolute zero pressure is used as a theoretical reference point or baseline for pressure measurements.

Absolute zero pressure should not be confused with absolute zero temperature, the lowest theoretically attainable temperature, at which a system's thermal energy is at its minimum. The two are related only indirectly: for a fixed amount of ideal gas held at constant volume, pressure is proportional to absolute temperature and would extrapolate to zero at absolute zero temperature.

While absolute zero pressure cannot be practically achieved, it is still a valuable reference point in pressure measurements and thermodynamics. It serves as the basis for absolute pressure measurements and calculations, where pressures are referenced relative to this state of complete vacuum.

Absolute pressure is by definition referenced to absolute zero pressure, and values are expressed in units such as pascals (Pa), bars (bar), or torr (in US customary units, often marked psia). This allows pressure comparisons and calculations that do not depend on local atmospheric conditions.

It's important to note that many real-world pressure measurements are reported as gauge pressure, relative to atmospheric pressure rather than to absolute zero pressure, because atmospheric pressure is the more practical reference point for many applications.

Absolute pressure formula

The formula to calculate absolute pressure depends on the context and the units being used. However, here are a few common formulas for calculating absolute pressure:

- Absolute Pressure from Gauge Pressure: To convert gauge pressure to absolute pressure, add the gauge pressure to the atmospheric pressure. The formula is:

Absolute Pressure = Gauge Pressure + Atmospheric Pressure

Where: Absolute Pressure is the total pressure relative to a perfect vacuum or absolute zero pressure. Gauge Pressure is the pressure measured relative to atmospheric pressure. Atmospheric Pressure is the pressure exerted by the Earth's atmosphere at a specific location.

- Ideal Gas Law: The ideal gas law can be used to calculate the absolute pressure of an ideal gas based on its temperature, volume, and the number of moles of gas present. The formula is:

Absolute Pressure = (n * R * T) / V

Where: Absolute Pressure is the pressure of the gas in absolute units (e.g., pascals). n is the number of moles of gas. R is the ideal gas constant. T is the temperature of the gas in Kelvin. V is the volume of the gas.

- Other Formulas: Depending on the specific scenario and fluid properties, additional formulas may be used to calculate absolute pressure. For example, in fluid mechanics, the Bernoulli equation can be employed to determine the pressure at one point in a flow from its velocity and elevation and the conditions at another point.
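The ideal gas law formula listed above can be checked numerically. A minimal sketch in SI units, assuming the CODATA value of the molar gas constant; the example of one mole at 273.15 K in 0.022414 m³ should come out close to one standard atmosphere. The function name is illustrative.

```python
# Ideal gas law: P = n * R * T / V (SI units; result is absolute pressure in Pa).
R = 8.314462618  # J/(mol*K), molar gas constant


def ideal_gas_pressure(n_mol: float, t_kelvin: float, v_m3: float) -> float:
    if t_kelvin <= 0.0 or v_m3 <= 0.0:
        raise ValueError("temperature (K) and volume must be positive")
    return n_mol * R * t_kelvin / v_m3


p = ideal_gas_pressure(1.0, 273.15, 0.022414)
print(f"{p:.0f} Pa")  # 101325 Pa, about 1 atm
```

The result is always absolute, since the ideal gas law has no notion of a gauge reference.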

It's important to ensure that the units are consistent in the calculations. Additionally, in practical applications, other factors like humidity, non-ideal gas behavior, and compressibility effects may need to be considered for accurate pressure calculations.

When working with pressure calculations, it's recommended to refer to appropriate equations, standards, or guidelines specific to the application and consult with domain experts to ensure accurate and reliable results.

Fig 6. Absolute pressure

How can you measure the Gauge Pressure?

Gauge pressure refers to the pressure measured relative to atmospheric pressure. To measure gauge pressure, you can use various instruments and techniques. Here are a few common methods:

- Pressure Gauges: Pressure gauges are widely used instruments for measuring gauge pressure. They consist of a pressure-sensing element connected to a dial or digital display. The sensing element may be a Bourdon tube, diaphragm, or other mechanisms that respond to pressure changes. As the pressure increases or decreases, the sensing element undergoes deformation, which is then translated into a corresponding reading on the gauge's display. Pressure gauges are available in a range of types, including analog, digital, and differential pressure gauges, catering to different applications.

- Pressure Transducers: Pressure transducers, also known as pressure sensors or transmitters, are electronic devices used to measure pressure. They employ various technologies, such as strain gauges, piezoresistive elements, or capacitive sensors, to convert pressure into an electrical signal. The transducer outputs a proportional electrical signal, which can be displayed on a digital indicator or processed by a control system. Gauge pressure transducers are calibrated to provide readings relative to atmospheric pressure.

- Manometers: Manometers are simple devices that use a liquid column to measure pressure. They consist of a U-shaped tube partially filled with a liquid, such as water or mercury. One end of the tube is connected to the pressure being measured, while the other end is open to the atmosphere. The difference in liquid level between the two arms of the U-tube, h, is a measure of the gauge pressure: P = ρ·g·h, where ρ is the density of the liquid and g is the acceleration due to gravity. Calibrated scales allow the gauge pressure to be read directly.

- Differential Pressure Gauges: Differential pressure gauges are specifically designed to measure the pressure difference between two points in a system. They consist of two pressure-sensing elements connected to a display or indicator. When one port is left open to the atmosphere, the differential reading equals the gauge pressure at the other port.
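For an open U-tube manometer, gauge pressure follows from the level difference as P = ρ·g·h. A minimal sketch, assuming nominal densities (both vary with temperature) and neglecting the density of the gas above the liquid; the density table and function name are illustrative.

```python
# Gauge pressure from an open U-tube manometer: P = rho * g * delta_h.
G_STANDARD = 9.80665  # m/s^2

FLUID_DENSITY = {  # kg/m^3, nominal values (assumed; both vary with temperature)
    "water": 1000.0,
    "mercury": 13595.1,
}


def manometer_gauge_pressure(delta_h_m: float, fluid: str = "water") -> float:
    """Gauge pressure (Pa) from the level difference between the two arms.
    Neglects the density of the gas above the liquid."""
    return FLUID_DENSITY[fluid] * G_STANDARD * delta_h_m


print(f"{manometer_gauge_pressure(0.10):.1f} Pa")             # 980.7 Pa for 10 cm of water
print(f"{manometer_gauge_pressure(0.10, 'mercury'):.0f} Pa")  # 13332 Pa for 100 mm of mercury
```

The mercury case shows why mercury manometers suit higher pressures: the same height represents about 13.6 times more pressure than water.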

It is crucial to select the appropriate measuring instrument based on the pressure range, accuracy requirements, environmental conditions, and the medium being measured (liquid or gas). Calibration and periodic maintenance are also important to ensure accurate and reliable gauge pressure measurements.

These methods allow engineers, technicians, and scientists to measure gauge pressure accurately, making them useful in various applications such as process control, HVAC systems, hydraulic systems, and industrial processes.

Fig 7. Difference between absolute and gauge pressure

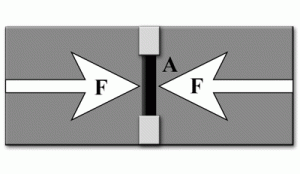

How can you measure Differential Pressure?

Measuring differential pressure involves determining the pressure difference between two points in a system. Here are a few common methods used to measure differential pressure:

- Differential Pressure Gauges: Differential pressure gauges are specifically designed to measure the pressure difference between two points. They consist of two pressure-sensing elements, each connected to one of the two points in the system. The sensing elements can be diaphragms, Bourdon tubes, or other pressure-sensitive mechanisms. The difference in pressure causes the sensing elements to deflect, and the resulting displacement is displayed on the gauge. Differential pressure gauges are available in analog and digital forms, providing a direct reading of the pressure difference.

- Manometers: Manometers can also be used to measure differential pressure. U-tube manometers or inclined manometers are commonly employed for this purpose. In a U-tube manometer, a U-shaped tube is partially filled with a liquid, such as water or mercury. The two ends of the U-tube are connected to the two points where the differential pressure is to be measured. The difference in liquid levels in the two arms of the U-tube represents the differential pressure. The measurement can be read directly from the scale on the manometer.

- Differential Pressure Transmitters: Differential pressure transmitters utilize electronic sensors and are commonly used in industrial and automation applications. They consist of two pressure-sensing elements connected to the two points where the differential pressure needs to be measured. The sensors convert the pressure difference into an electrical signal, which is then amplified, processed, and transmitted to a control system or display. Differential pressure transmitters can provide continuous, accurate readings of the pressure difference.

- Pitot Tubes: Pitot tubes are commonly used to measure the difference between the total (stagnation) pressure and the static pressure of a fluid flow. They are often used in applications such as measuring airspeed in aircraft or flow rate in pipes. A pitot tube has a small opening facing the flow, which senses the total pressure, while another opening perpendicular to the flow senses the static pressure. The difference between these two pressures is the dynamic pressure, ½ρv², which is used to calculate the flow rate or airspeed.
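Since the pitot differential pressure equals the dynamic pressure ½ρv², flow speed follows as v = √(2ΔP/ρ). A minimal sketch, assuming incompressible flow (reasonable for low-speed air or liquids) and sea-level standard air density as the default; real instruments usually add a calibration coefficient, which is omitted here. The function name is illustrative.

```python
import math


def pitot_velocity(delta_p_pa: float, rho_kg_m3: float = 1.225) -> float:
    """Flow speed (m/s) from pitot differential pressure: v = sqrt(2 * dP / rho).
    Assumes incompressible flow; default density is sea-level standard air."""
    if delta_p_pa < 0.0:
        raise ValueError("total pressure should not be below static pressure")
    return math.sqrt(2.0 * delta_p_pa / rho_kg_m3)


print(f"{pitot_velocity(612.5):.2f} m/s")  # 31.62 m/s
```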

The choice of method depends on factors such as the pressure range, accuracy requirements, environmental conditions, and the specific application. It is important to select an appropriate instrument or technique and calibrate it if necessary to ensure accurate and reliable differential pressure measurements.

By utilizing these methods, engineers, technicians, and scientists can accurately measure and monitor differential pressure in various applications, including flow measurement, filtration systems, HVAC systems, and industrial processes.

Fig 8. Differential Pressure

Differential Pressure Formula

The formula to calculate differential pressure depends on the specific context and the units being used. Here are a few common formulas for calculating differential pressure:

- Differential Pressure (ΔP) between two points: The differential pressure between two points in a fluid system can be calculated using the following formula:

ΔP = P2 - P1

Where: ΔP = differential pressure, P2 = pressure at Point 2, P1 = pressure at Point 1.

This formula calculates the difference between the pressures at the two points, providing the value of the differential pressure.

- Differential Pressure (ΔP) across a flow restriction: In fluid flow applications, the differential pressure across a flow restriction, such as an orifice plate or a venturi meter, can be calculated using the following formula:

ΔP = K * (ρ * V^2) / 2

Where: ΔP = differential pressure, K = coefficient specific to the flow restriction, ρ = density of the fluid, V = velocity of the fluid.

This formula utilizes the density and velocity of the fluid along with the specific coefficient for the flow restriction to calculate the differential pressure across it.
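The restriction formula can be evaluated directly, and rearranged to estimate velocity from a measured ΔP, which is how differential-pressure flowmeters are used. A minimal sketch: the coefficient K = 2.0 and the water flow below are hypothetical; real coefficients come from the device manufacturer or from standards such as ISO 5167 for orifice plates and venturi tubes, and they depend on which velocity V refers to.

```python
import math


def restriction_dp(k: float, rho: float, v: float) -> float:
    """Pressure drop across a restriction: dP = K * rho * V**2 / 2 (SI units)."""
    return k * rho * v ** 2 / 2.0


def velocity_from_dp(k: float, rho: float, dp: float) -> float:
    """Rearranged form: V = sqrt(2 * dP / (K * rho))."""
    return math.sqrt(2.0 * dp / (k * rho))


dp = restriction_dp(k=2.0, rho=1000.0, v=1.5)  # hypothetical K, water at 1.5 m/s
print(dp)                                      # 2250.0 (Pa)
print(velocity_from_dp(2.0, 1000.0, dp))       # 1.5 (m/s)
```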

It's important to note that these formulas provide simplified representations of differential pressure calculations, and specific factors and coefficients may vary depending on the system, units, and conditions. Additionally, units of pressure must be consistent throughout the calculation (e.g., pascals, pounds per square inch, etc.).

When working with differential pressure, it is recommended to consult the specific equations, standards, or guidelines relevant to the particular application or measurement device being used to ensure accurate and reliable calculations.

Example of the Differential Pressure formula

Here's an example of a differential pressure calculation:

Let's consider a scenario where you have two pressure measurements, P1 and P2, taken at two different points in a fluid system. You want to calculate the differential pressure between these two points.

Assuming the measured pressure at Point 1 (P1) is 50 psi and the pressure at Point 2 (P2) is 40 psi, you can use the formula:

ΔP = P2 - P1

Substituting the given values:

ΔP = 40 psi - 50 psi

ΔP = -10 psi

The result is negative, indicating that the pressure at Point 2 is 10 psi lower than the pressure at Point 1: there is a 10 psi pressure drop from Point 1 to Point 2.

Remember to ensure consistent units of pressure throughout the calculation to obtain accurate results. Also, note that this is a simplified example, and in practical applications, there might be additional factors or conversions involved based on the specific system and units being used.
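The worked example can be reproduced in a few lines, with the sign convention ΔP = P2 - P1 kept explicit and the result also converted to kPa (1 psi = 6.894757 kPa). The function name is illustrative.

```python
PSI_TO_KPA = 6.894757  # 1 psi expressed in kPa


def differential_pressure(p1: float, p2: float) -> float:
    """dP = P2 - P1; a negative result means pressure drops from point 1 to point 2."""
    return p2 - p1


dp_psi = differential_pressure(p1=50.0, p2=40.0)
print(dp_psi)                            # -10.0
print(f"{dp_psi * PSI_TO_KPA:.2f} kPa")  # -68.95 kPa
```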

What are the two ways of measuring Differential Pressure?

There are generally two primary ways of measuring differential pressure:

- Direct Measurement:

Direct measurement of differential pressure involves using a device specifically designed to directly measure the pressure difference between two points. This can be achieved using instruments such as differential pressure gauges, differential pressure transmitters, or manometers. These devices have two sensing elements or ports connected to the two points where the pressure difference is to be measured. They directly measure and display the differential pressure or provide an electrical output proportional to the pressure difference. Direct measurement methods provide real-time readings of the differential pressure and are commonly used in various applications.

- Indirect Calculation:

Indirect calculation of differential pressure involves measuring individual pressures at two points and then calculating the difference between them. In this approach, separate pressure measurements are taken at the two points using pressure sensors or gauges, such as pressure transducers or pressure gauges. These individual pressure measurements are then used in calculations to determine the differential pressure. The calculation can be as simple as subtracting one pressure from another (P2 - P1) or may involve more complex equations or conversions depending on the specific context.

Both direct measurement and indirect calculation methods have their advantages and are used depending on the application requirements. Direct measurement provides instantaneous readings and is suitable for real-time monitoring and control, while indirect calculation allows flexibility in terms of equipment choices and can be more cost-effective in certain scenarios. The choice between the two methods depends on factors such as accuracy requirements, application constraints, and available instrumentation.

Vacuum pressure

Vacuum pressure refers to a pressure below atmospheric pressure, measured relative to the ambient atmospheric pressure. It is typically expressed either as a negative gauge pressure or as a positive magnitude below atmospheric pressure (for example, inches of mercury of vacuum).

In a vacuum, the pressure is lower than the surrounding atmospheric pressure due to the absence or reduction of gas molecules. The level of vacuum pressure is measured in various units, such as pascals (Pa), millibars (mb), torr, or inches of mercury (inHg).
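Because vacuum readings mix units and conventions, a small conversion helper is useful. A minimal sketch: the conversion factors are standard (1 mbar = 100 Pa, 1 torr = 1/760 atm, 1 inHg at 0 °C ≈ 3386.389 Pa), while the dictionary, function names, and the 20 inHg vacuum example are illustrative. The vacuum reading is assumed to be given as a positive depth below atmosphere, in the same unit as the atmospheric pressure.

```python
# Pascals per unit; torr is defined as 1/760 of a standard atmosphere.
PA_PER_UNIT = {
    "Pa": 1.0,
    "mbar": 100.0,
    "torr": 101325.0 / 760.0,
    "inHg": 3386.389,  # inch of mercury at 0 °C
}


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a pressure value between the units in PA_PER_UNIT."""
    return value * PA_PER_UNIT[from_unit] / PA_PER_UNIT[to_unit]


def vacuum_to_absolute(vacuum_reading: float, p_atm: float) -> float:
    """Absolute pressure from a vacuum reading (positive depth below atmosphere)."""
    return p_atm - vacuum_reading


p_abs_inhg = vacuum_to_absolute(20.0, 29.92)  # hypothetical 20 inHg vacuum, standard atmosphere
print(f"{p_abs_inhg:.2f} inHg abs, about {convert(p_abs_inhg, 'inHg', 'torr'):.0f} torr")
```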

Vacuum pressure finds applications in several fields:

- Vacuum Systems: Vacuum pressure is essential for the operation of vacuum systems used in various industrial processes, scientific research, and manufacturing applications. These systems create controlled low-pressure environments by reducing the gas pressure to create a vacuum for specific purposes, such as removing air and moisture, preventing contamination, or enabling chemical reactions under reduced pressure conditions.

- Vacuum Technology: Vacuum pressure plays a crucial role in vacuum technology, which involves the design, development, and application of vacuum systems and components. It is employed in areas such as vacuum pumps, vacuum chambers, and vacuum instrumentation, enabling advancements in fields like electronics, material science, and surface engineering.

- Physics and Chemistry Experiments: Vacuum pressure is commonly used in physics and chemistry experiments that require low-pressure conditions. These experiments create a vacuum to eliminate unwanted gas interactions, study gas behavior, explore particle interactions, or investigate phenomena at extremely low pressures.

- Space Technology: In space exploration, vacuum is the operating environment itself. Because space has essentially no atmospheric pressure, space-related technologies such as propulsion systems and life support systems must be designed for vacuum conditions, and space hardware is tested under vacuum before launch.

It's important to note that vacuum pressure is often categorized into different levels, such as low vacuum, medium vacuum, high vacuum, and ultra-high vacuum (UHV), depending on the specific pressure range being considered.
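The classification can be expressed as a simple lookup on absolute pressure. This is a sketch under a loud assumption: the range boundaries below (1 mbar, 1e-3 mbar, 1e-7 mbar) follow one commonly used convention, and other sources and standards draw the lines differently. The function name `classify_vacuum` is hypothetical. Note that vacuum levels are stated as absolute pressures, since a reading relative to a perfect vacuum does not change with local atmospheric conditions.

```python
# Lower bounds in millibar (absolute) for each named range. These thresholds are
# an assumption: they follow one common convention, and other sources differ.
VACUUM_RANGES = [
    (1.0, "low vacuum"),      # atmospheric pressure down to 1 mbar
    (1e-3, "medium vacuum"),  # 1 mbar down to 1e-3 mbar
    (1e-7, "high vacuum"),    # 1e-3 mbar down to 1e-7 mbar
]

def classify_vacuum(p_abs_mbar: float, p_atm_mbar: float = 1013.25) -> str:
    """Name the vacuum range for an absolute pressure given in mbar."""
    if p_abs_mbar <= 0:
        raise ValueError("absolute pressure must be positive")
    if p_abs_mbar >= p_atm_mbar:
        return "not a vacuum"
    for lower_bound, name in VACUUM_RANGES:
        if p_abs_mbar >= lower_bound:
            return name
    return "ultra-high vacuum"  # below 1e-7 mbar

for p in (500.0, 0.01, 1e-5, 1e-9):
    print(f"{p:g} mbar: {classify_vacuum(p)}")
```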

Overall, vacuum pressure is a crucial concept in various scientific, technological, and industrial domains, allowing for the creation of controlled low-pressure environments and enabling a wide range of applications and research.

Fig 9. Vacuum pressure

What is vacuum pressure used for?

Vacuum pressure is used in various applications across different industries. Here are some common uses of vacuum pressure:

- Industrial Processes: Vacuum pressure plays a crucial role in many industrial processes. It is used in vacuum distillation, where lower pressure lowers the boiling point of liquids, allowing for more efficient separation and purification of substances. Vacuum pressure is also employed in vacuum drying, vacuum impregnation, and vacuum packaging to remove moisture, increase product shelf life, and enhance product quality.

- Manufacturing: Vacuum pressure is utilized in manufacturing processes such as vacuum molding or vacuum forming. In these processes, a heated material is placed over a mold, and vacuum pressure is applied to draw the material onto the mold's shape. This allows for the production of complex shapes and precision molding.

- Electronics and Semiconductor Industry: The electronics and semiconductor industries extensively use vacuum pressure in various manufacturing processes. Vacuum pressure is utilized in thin film deposition, where layers of material are deposited onto substrates to create electronic components or semiconductor devices. It is also used in vacuum chambers for testing and manufacturing electronic components to prevent contamination and ensure proper functioning.

- Scientific Research: Vacuum pressure is essential in scientific research, particularly in physics, chemistry, and material science. Vacuum chambers are used to create low-pressure environments for experiments that need controlled conditions, such as studying the behavior of gases and plasmas or conducting specific chemical reactions in the absence of air.

- Space Technology: Vacuum pressure is fundamental in space exploration and satellite technology. Because space is a near-perfect vacuum, vacuum chambers on Earth are used to simulate that environment and test how components and materials perform under space-like conditions. Propulsion systems such as ion thrusters are also designed to generate thrust in the vacuum of space.

- Analytical Instruments: Many analytical instruments, such as mass spectrometers, electron microscopes, and surface analysis equipment, require vacuum pressure for accurate and reliable measurements. Maintaining a vacuum in these instruments helps prevent interference from air molecules and enables precise analysis of samples.

Overall, vacuum pressure is widely utilized in various industries and scientific fields for manufacturing, research, testing, and creating controlled environments. Its applications range from industrial processes to cutting-edge technologies, highlighting its importance across multiple disciplines.
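The vacuum distillation point above (lower pressure lowers the boiling point) can be made concrete with the Antoine equation, log10(P) = A - B / (C + T). This is a sketch: the constants are commonly tabulated values for water between roughly 1 and 100 °C with P in mmHg (approximately torr) and T in °C, and they are taken here as assumptions. The function name `water_boiling_point_c` is hypothetical. The pressure must be absolute, since the equation describes the vapor pressure of the liquid against a perfect-vacuum zero.

```python
import math

# Antoine constants for water (P in mmHg ~ torr, T in degrees C), valid roughly
# 1-100 degrees C. Commonly tabulated values, used here as an assumption.
A, B, C = 8.07131, 1730.63, 233.426

def water_boiling_point_c(p_torr: float) -> float:
    """Estimate water's boiling point (degrees C) at absolute pressure p_torr."""
    # Antoine equation log10(P) = A - B / (C + T), solved for T.
    return B / (A - math.log10(p_torr)) - C

for p in (760.0, 100.0):
    print(f"{p:6.1f} torr -> {water_boiling_point_c(p):5.1f} degrees C")
```

At 760 torr (standard atmospheric pressure) this gives about 100 °C, while at 100 torr water boils at about 51.6 °C, which is why vacuum distillation can separate heat-sensitive substances at lower temperatures.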

How can vacuum pressure be used as a pressure reference?

Vacuum pressure can be used as a pressure reference by establishing it as a baseline or reference point for pressure measurements. Here's how vacuum pressure can be utilized as a pressure reference:

- Differential Pressure Measurement: Vacuum pressure can serve as a reference point for measuring differential pressure. By comparing the pressure at a specific location or within a system to the vacuum pressure, the differential pressure can be determined. This is commonly used in applications such as leak testing, where the pressure difference between the test object and the vacuum reference is measured.

- Calibration Standards: Vacuum pressure can be used as a reference for calibrating pressure measurement instruments. Vacuum calibration standards are devices that provide a known and stable vacuum pressure, and instruments calibrated against them gain accuracy and traceability in their pressure measurements.

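- Absolute Pressure Calculation: Absolute pressure is the total pressure relative to a perfect vacuum. It can be calculated from a gauge reading by adding the local atmospheric pressure (Absolute Pressure = Atmospheric Pressure + Gauge Pressure), or from a vacuum reading by subtracting the vacuum reading from the local atmospheric pressure (Absolute Pressure = Atmospheric Pressure - Vacuum Pressure). Referencing measurements to a perfect vacuum in this way removes their dependence on local atmospheric pressure.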

- Vacuum Chambers: Vacuum chambers are often used as a reference for pressure measurements. They create a controlled environment with a specified vacuum pressure. Pressure measurements taken within the vacuum chamber can be compared to the known vacuum pressure to assess the pressure conditions accurately.

When using vacuum pressure as a reference, it's important to consider factors such as calibration, atmospheric pressure correction, and proper equipment selection. Calibration against established standards and adherence to measurement protocols are essential for maintaining accuracy and reliability in pressure measurements.

By utilizing vacuum pressure as a reference, pressure measurements can be made relative to the absence of pressure in a vacuum, providing a baseline for accurate and meaningful comparisons.
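The relationships between absolute, gauge, and vacuum readings can be sketched as three small conversion functions. This is a minimal illustration: the function names are hypothetical, all values must share one unit (kPa here), and the default atmospheric pressure is the standard sea-level value of 101.325 kPa, which is an assumption; in practice the local barometric reading should be supplied.

```python
STANDARD_ATM_KPA = 101.325  # standard sea-level atmosphere; local value varies

def gauge_to_absolute(p_gauge, p_atm=STANDARD_ATM_KPA):
    """Absolute = atmospheric + gauge."""
    return p_atm + p_gauge

def absolute_to_gauge(p_abs, p_atm=STANDARD_ATM_KPA):
    """Gauge = absolute - atmospheric (negative below atmospheric)."""
    return p_abs - p_atm

def absolute_to_vacuum(p_abs, p_atm=STANDARD_ATM_KPA):
    """Vacuum = atmospheric - absolute (positive below atmospheric)."""
    return p_atm - p_abs

# A chamber held at 20 kPa absolute, read at sea level and at a hypothetical
# high-altitude site where local atmospheric pressure is 80 kPa.
for p_atm in (STANDARD_ATM_KPA, 80.0):
    print(f"p_atm={p_atm:.3f} kPa: gauge={absolute_to_gauge(20.0, p_atm):.3f} kPa, "
          f"vacuum={absolute_to_vacuum(20.0, p_atm):.3f} kPa")
```

The same 20 kPa absolute chamber reads as about 81.3 kPa of vacuum at sea level but only 60 kPa of vacuum at the high-altitude site, which is why atmospheric pressure correction matters whenever vacuum readings are compared.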

Vacuum pressure formula

Vacuum pressure expresses how far a pressure lies below local atmospheric pressure, so calculating it requires both an absolute pressure and the local atmospheric pressure. The exact form of the formula depends on the context and the units being used. Here are a few common formulas for calculating vacuum pressure:

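- Absolute Pressure Formula: Vacuum pressure is often represented as a negative value relative to atmospheric pressure. The formula to calculate vacuum pressure from absolute pressure is

Vacuum Pressure = Atmospheric Pressure - Absolute Pressure

This gives the vacuum as a positive number. The equivalent negative gauge value is Gauge Pressure = Absolute Pressure - Atmospheric Pressure.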

- Conversion to Pascal or Torr: If you have the vacuum pressure in another unit, such as millibars (mb) or inches of mercury (inHg), you can convert it to pascals (Pa) or torr using conversion factors:

1 millibar (mb) = 100 pascals (Pa)
1 inch of mercury (inHg) ≈ 33.86 millibars (mb) ≈ 3386.39 pascals (Pa) ≈ 25.4 torr
1 torr ≈ 133.32 pascals (Pa)

By converting the vacuum pressure to pascals or torr, you can work with a consistent unit for further calculations or comparisons.
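Converting through pascals can be sketched as a single lookup table. This is an illustration rather than a reference implementation: the function name `convert_pressure` is hypothetical, the atm and torr factors are exact by definition (1 atm = 101,325 Pa and 1 torr = 1/760 atm), while the inHg factor (mercury at 0 °C) and the psi factor are rounded values. Converting units does not change the reference: a vacuum reading stays a vacuum reading and an absolute reading stays absolute.

```python
# Factors for converting each unit to pascals. The atm and torr values are exact
# by definition (1 torr = 1/760 atm); the inHg and psi factors are rounded.
TO_PA = {
    "Pa": 1.0,
    "mb": 100.0,
    "torr": 101325.0 / 760.0,
    "inHg": 3386.39,
    "psi": 6894.757,
    "atm": 101325.0,
}

def convert_pressure(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a pressure between units by going through pascals."""
    return value * TO_PA[from_unit] / TO_PA[to_unit]

# Example: a 25 inHg reading expressed in pascals, millibars, and torr.
for unit in ("Pa", "mb", "torr"):
    print(f"25 inHg = {convert_pressure(25.0, 'inHg', unit):.1f} {unit}")
```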

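It's important to note that the vacuum pressure formula may vary depending on the specific application and the units used. Pressure measurements also require proper calibration and consideration of factors such as altitude, temperature, and the specific properties of the gas or fluid being measured. Altitude matters in particular: because vacuum pressure is referenced to local atmospheric pressure, the same absolute pressure gives a smaller vacuum reading at a high-altitude site than at sea level.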

When working with vacuum pressure calculations, consult relevant standards, guidelines, or specific equations based on your application and ensure consistency in units and reference points for accurate and meaningful results.

Fig 10. Pressure scale

What is the difference between absolute and vacuum pressure?

The main difference between absolute pressure and vacuum pressure lies in their reference points: absolute pressure is referenced to a perfect vacuum, while vacuum pressure is referenced to local atmospheric pressure. Here's a breakdown of the differences:

Absolute Pressure: Absolute pressure is the total pressure exerted by a fluid or gas, measured relative to absolute zero pressure, or a perfect vacuum. Because its zero point is a perfect vacuum, an absolute reading equals the gauge pressure plus the local atmospheric pressure. For example, if the atmospheric pressure is 1 atmosphere (atm), an absolute pressure of 2 atm is twice the surrounding atmospheric pressure, or 1 atm gauge.

Vacuum Pressure: Vacuum pressure, on the other hand, measures how far a pressure lies below the atmospheric pressure at a specific location. It is often expressed as a negative value relative to atmospheric pressure. The greater the vacuum pressure, the lower the absolute pressure. A perfect vacuum has an absolute pressure of zero, which corresponds to a vacuum reading equal to the full local atmospheric pressure (about 101.3 kPa, or 29.92 inHg, at sea level).

In summary, absolute pressure is referenced to a perfect vacuum and therefore includes atmospheric pressure in its value, while vacuum pressure is referenced to local atmospheric pressure and describes only pressures below it. An absolute reading is independent of local atmospheric conditions, whereas a vacuum reading changes with them.

Conclusion

In conclusion, pressure is an essential physical quantity that is measured and utilized in various fields and industries. Understanding different types of pressure references, such as atmospheric pressure, absolute pressure, gauge pressure, and differential pressure, allows for accurate measurements and meaningful comparisons.

Atmospheric pressure represents the pressure exerted by Earth's atmosphere at a specific location and finds applications in weather forecasting, altitude determination, aviation, and HVAC systems, among others.

Absolute pressure refers to the pressure measured relative to absolute zero pressure or a perfect vacuum. It serves as a reference point for pressure measurements and is used in calibration standards, vacuum systems, and scientific research.

Gauge pressure is measured relative to atmospheric pressure and is commonly used for tire pressure, hydraulic systems, and closed vessel measurements.

Differential pressure is the pressure difference between two points in a system and is employed in various applications such as flow rate calculations, filter monitoring, and HVAC systems.

Different methods and instruments are used to measure these types of pressure, including barometers, transducers, manometers, and digital gauges.

By understanding and utilizing different pressure references, engineers, technicians, scientists, and researchers can accurately measure and monitor pressure, enabling advancements in various industries, scientific research, and technological applications.

To recap

What is pressure?

Pressure is the force exerted on a surface per unit area. It is typically measured in units such as pascals (Pa), pounds per square inch (psi), or atmospheres (atm).

How is pressure measured?

Pressure is measured using instruments such as pressure gauges, transducers, or manometers. These instruments detect the force applied to a sensing element and convert it into a pressure reading.

What is the difference between absolute pressure and gauge pressure?

Absolute pressure is measured relative to a perfect vacuum or absolute zero pressure, while gauge pressure is measured relative to atmospheric pressure. Absolute pressure includes atmospheric pressure, whereas gauge pressure does not.

What is differential pressure?

Differential pressure is the difference in pressure between two points in a system. It is often used to measure flow rates, monitor filter condition, and determine pressure drops across devices.

How does atmospheric pressure change with altitude?

Atmospheric pressure decreases with increasing altitude. The rate of decrease depends on factors such as temperature and weather conditions. Near sea level, atmospheric pressure drops by roughly 1.2 kilopascals (kPa) for every 100 meters of altitude gained, and at about 5,500 meters it is roughly half its sea-level value.
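The altitude dependence can be estimated with the barometric formula of the International Standard Atmosphere (ISA). This is a sketch under stated assumptions: it uses ISA sea-level conditions (101,325 Pa, 288.15 K) and a constant lapse rate of 6.5 K per kilometer, so it is valid only in the troposphere (below about 11 km) and ignores day-to-day weather. The function name `isa_pressure` is hypothetical.

```python
# International Standard Atmosphere (ISA) constants, troposphere only.
P0 = 101_325.0   # sea-level pressure, Pa
T0 = 288.15      # sea-level temperature, K
L = 0.0065       # temperature lapse rate, K/m
G = 9.80665      # standard gravity, m/s^2
M = 0.0289644    # molar mass of dry air, kg/mol
R = 8.3144598    # universal gas constant, J/(mol*K)

def isa_pressure(altitude_m: float) -> float:
    """Standard-atmosphere pressure (Pa) at altitude_m, valid below ~11 km."""
    if altitude_m > 11_000:
        raise ValueError("this formula only covers the troposphere (below 11 km)")
    # Temperature falls linearly with height, giving a power-law pressure profile.
    return P0 * (1 - L * altitude_m / T0) ** (G * M / (R * L))

for h in (0, 1000, 5500):
    print(f"{h:5d} m: {isa_pressure(h) / 1000:.1f} kPa")
```

Under these assumptions pressure falls by about 11% over the first 1,000 m and reaches roughly half its sea-level value near 5,500 m.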

What is the relationship between pressure and temperature?

According to the ideal gas law, for a fixed amount of gas at constant volume, the pressure of a gas is directly proportional to its absolute temperature (measured in kelvin). When temperature increases, pressure increases as well, and vice versa.
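Because the proportionality holds only for absolute pressure and absolute temperature, a gauge reading must be converted before applying it. The sketch below uses tire pressure as an example; it assumes the volume stays constant (a real tire expands slightly), ideal-gas behavior, and an unchanged atmospheric pressure of 101.325 kPa. The function name `heated_gauge_pressure` is hypothetical.

```python
def heated_gauge_pressure(p_gauge_kpa, t1_c, t2_c, p_atm_kpa=101.325):
    """New gauge pressure after a constant-volume temperature change."""
    p1_abs = p_gauge_kpa + p_atm_kpa            # the gas law needs absolute pressure
    t1_k, t2_k = t1_c + 273.15, t2_c + 273.15   # ...and absolute temperature
    p2_abs = p1_abs * t2_k / t1_k               # P1/T1 = P2/T2 at fixed volume
    return p2_abs - p_atm_kpa                   # convert back to a gauge reading

# A tire at 220 kPa gauge and 20 degrees C warms to 50 degrees C.
print(f"{heated_gauge_pressure(220.0, 20.0, 50.0):.1f} kPa gauge")
```

This gives about 252.9 kPa gauge. Applying the temperature ratio to the gauge value directly would give about 242.5 kPa, underestimating the rise.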

Can pressure be negative?

In some contexts, pressure can be negative relative to a reference point. For example, vacuum pressure is often expressed as a negative gauge value, indicating pressure below atmospheric pressure. The absolute pressure of a gas, however, cannot be negative.

What is the difference between pressure and stress?

Pressure refers to the force per unit area applied to an object or surface, while stress is a measure of the internal resistance or force within a material. Stress is typically expressed in terms of force per unit area and is used to assess the strength and deformation of materials.

How can pressure be converted between different units?

Pressure can be converted between different units using conversion factors. For example, 1 standard atmosphere (atm) is defined as exactly 101,325 pascals (Pa), which is approximately 14.7 pounds per square inch (psi).

Why is pressure important in everyday life?

Pressure is important in everyday life as it influences numerous phenomena and systems. It affects weather patterns, tire performance, fluid flow, breathing, and even cooking. Understanding and managing pressure allows us to design efficient systems, measure critical parameters, and ensure safety in various applications.

