The Fine Line of Accuracy: Demystifying Pressure Sensor Error Specifications

Understanding accuracy and error specifications is crucial when working with sensors. These specifications determine how reliably a sensor measures and reports values, influencing its suitability for specific tasks. Accuracy represents how close a sensor's measurements are to the actual values being measured. Error specifications outline the permissible deviation from these true values.

Various factors impact accuracy, including calibration, environmental conditions, hysteresis, and manufacturing variations. Calibration ensures a sensor's readings align with true values, while environmental factors like temperature and humidity can affect performance.

Evaluating accuracy involves several methods, such as comparison with reference standards and analysis of linearity, hysteresis, and repeatability. Together, these assessments give a comprehensive picture of a sensor's performance characteristics.

Precision, distinct from accuracy, focuses on a sensor's ability to produce consistent measurements. Understanding these specifications aids in selecting sensors best suited for specific applications, ensuring reliable and precise measurements.
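
The difference between accuracy and precision can be illustrated with a small sketch. The readings and the true pressure below are hypothetical values chosen for illustration; the mean offset from the true value stands in for accuracy, and the sample standard deviation stands in for precision.

```python
import statistics


def mean_error(readings, true_value):
    """Average offset of the readings from the true value (an accuracy indicator)."""
    return statistics.mean(readings) - true_value


def spread(readings):
    """Sample standard deviation of the readings (a precision indicator)."""
    return statistics.stdev(readings)


# Hypothetical repeated readings (psi) while a reference applies exactly 50.0 psi.
readings = [50.4, 50.5, 50.45, 50.5, 50.4]
print("mean error (psi):", round(mean_error(readings, 50.0), 3))
print("spread (psi):", round(spread(readings), 3))
```

In this made-up data set the readings cluster tightly (good precision) but sit about 0.45 psi above the true value (poor accuracy), which is the kind of error calibration is meant to remove.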

Fig 1. Pressure sensor accuracy

What is pressure sensor accuracy?

Pressure sensor accuracy refers to how closely the output of a pressure sensor matches the actual pressure being measured. It indicates how far a reading can be trusted to represent the true pressure.

Accuracy is typically represented as a percentage of full-scale output (%FSO) or in absolute terms such as psi (pounds per square inch) or kPa (kilopascals). For instance, a pressure sensor with an accuracy of ±1% FSO can deviate from the actual pressure by up to 1% of its full-scale output at any point across its range.

Factors affecting pressure sensor accuracy include:

How can you measure pressure sensor accuracy?

Measuring the accuracy of a pressure sensor involves comparing its output readings to a known reference standard or a more accurate sensor in controlled conditions. Here are steps to measure pressure sensor accuracy:

Fig 2. Accuracy formula
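
As a rough sketch of the comparison described above, the error of each reading can be expressed as a percentage of full scale and the worst case reported. The function names and the calibration data are assumptions made for illustration, not a formula taken from a particular standard or datasheet.

```python
def error_percent_fs(sensor_reading, reference_reading, full_scale):
    """Signed error of one reading as a percentage of full scale."""
    return (sensor_reading - reference_reading) / full_scale * 100.0


def worst_case_error_percent_fs(pairs, full_scale):
    """Largest absolute error (% FS) over (reference, sensor) reading pairs."""
    return max(abs(error_percent_fs(sensor, ref, full_scale)) for ref, sensor in pairs)


# Hypothetical calibration run on a 0 to 100 psi sensor: (reference, sensor) in psi.
calibration_run = [
    (0.0, 0.1),
    (25.0, 25.2),
    (50.0, 50.15),
    (75.0, 74.9),
    (100.0, 100.2),
]
print(round(worst_case_error_percent_fs(calibration_run, full_scale=100.0), 3))
```

For this sample data the worst-case error is about 0.2% of full scale, so the sensor would meet a ±0.25% FS specification at the points tested.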

What are the different methods of pressure sensor accuracy?

Pressure sensor accuracy can be assessed through various methods, each offering insights into different aspects of the sensor's performance. Here are some common methods used to evaluate pressure sensor accuracy:

What are the error specifications for pressure sensors?

Error specifications for pressure sensors refer to the allowable deviations or inaccuracies in the sensor's output readings compared to the actual pressure being measured. These specifications outline the acceptable limits within which the sensor's readings are considered accurate and reliable.

Pressure sensor error specifications typically include different types of errors:

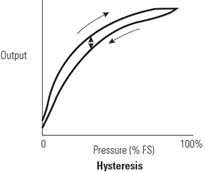

Hysteresis

The hysteresis in pressure sensors refers to the phenomenon where the sensor's output value for a particular pressure varies depending on whether the pressure is increasing or decreasing. Essentially, the sensor cannot return to the same output value for a given pressure when pressure is cycled up and then down again. When the pressure is gradually increased and then decreased along the same path, the output of the sensor might follow a different curve, resulting in a discrepancy between the ascending and descending pressure values.

This effect occurs due to the mechanical or material properties within the sensor. Elements like the elastic behavior of the sensing material or friction in the sensor's mechanical components can cause this deviation. Hysteresis can impact the accuracy of pressure measurements, particularly in applications where precise pressure control or consistency in readings is crucial. To mitigate its effects, calibration techniques or compensation algorithms are often employed to minimize the discrepancy and enhance the sensor's accuracy by accounting for this non-linear behavior. Understanding a sensor's hysteresis allows users to consider this characteristic in their applications and make appropriate adjustments to ensure accurate pressure readings.
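
One way to put a number on hysteresis is to record the output at the same pressure points on the way up and on the way down, then take the largest gap as a percentage of full-scale output. The sketch below assumes a hypothetical 0 to 100 psi sensor with a 0 to 10 V output; the readings are invented for illustration.

```python
def hysteresis_percent_fs(rising, falling, full_scale_output):
    """Largest rising/falling output gap at a shared pressure point, in % FS.

    rising and falling map applied pressure to sensor output for each direction.
    """
    shared_points = set(rising) & set(falling)
    if not shared_points:
        raise ValueError("rising and falling sweeps share no pressure points")
    worst_gap = max(abs(rising[p] - falling[p]) for p in shared_points)
    return worst_gap / full_scale_output * 100.0


# Hypothetical sweep: pressure in psi, output in volts.
rising = {0: 0.00, 25: 2.49, 50: 4.99, 75: 7.50, 100: 10.00}
falling = {0: 0.01, 25: 2.52, 50: 5.03, 75: 7.52, 100: 10.00}
print(round(hysteresis_percent_fs(rising, falling, full_scale_output=10.0), 3))
```

Here the largest gap is 0.04 V at 50 psi, which corresponds to roughly 0.4% of full-scale output.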

Fig 3. Hysteresis

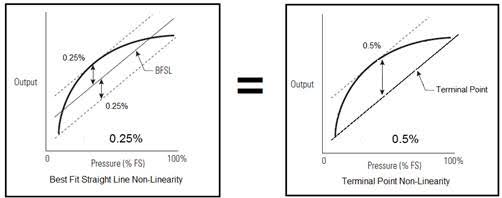

Pressure sensor linearity and accuracy

Pressure sensor linearity and accuracy are critical aspects of its performance. Linearity refers to how well the sensor's output correlates with changes in pressure across its operating range. A perfectly linear sensor would produce output values that precisely follow a straight line when plotted against pressure. However, real-world sensors may exhibit slight deviations from linearity due to inherent limitations in materials, design, or manufacturing processes. Linearity errors manifest as deviations from the ideal linear relationship between pressure input and sensor output.

Accuracy, on the other hand, encompasses the overall correctness of the sensor's measurements compared to the true or expected pressure values. It considers various factors such as systematic errors, calibration precision, and environmental influences. Accuracy reflects how closely the sensor's readings match the actual pressure being measured. While linearity focuses on the consistency of the sensor's response across its range, accuracy evaluates the sensor's performance in providing correct measurements relative to the true pressure values, considering both linearity errors and other potential sources of inaccuracies.

In practice, achieving high accuracy involves minimizing both linearity errors and other sources of deviation, such as calibration inaccuracies, hysteresis, temperature variations, and environmental factors. Manufacturers often specify accuracy as a percentage of full-scale output (%FSO), indicating the maximum deviation between the sensor's output and the actual pressure. Maintaining linearity within acceptable limits while ensuring high accuracy is crucial, especially in applications where precise pressure readings are essential for safety, control systems, or critical measurements.

Fig 4. Non-linearity and terminal point

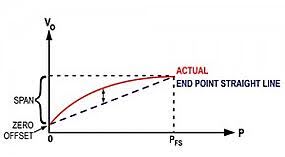

How can you measure pressure sensor accuracy with endpoint methods?

Endpoint methods are a class of techniques used to evaluate pressure sensor accuracy by focusing on the sensor's output at specific pressure points within its operating range. These methods involve comparing the sensor's output at the lower and upper ends of the pressure range with a reference standard or a known value. Here's how you can measure pressure sensor accuracy using end-point methods:

Fig 5. Endpoint method
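
A minimal sketch of an endpoint calculation follows. It assumes the reference line is drawn through the outputs measured at the lowest and highest applied pressures, and that the result is reported as a percentage of the output span; the sample data (a 0 to 100 psi sensor with a nominal 0 to 10 V output) is hypothetical.

```python
def endpoint_nonlinearity_percent_span(pressures, outputs):
    """Max deviation from the line through the first and last points, in % of span."""
    p_low, p_high = pressures[0], pressures[-1]
    y_low, y_high = outputs[0], outputs[-1]
    slope = (y_high - y_low) / (p_high - p_low)
    span = abs(y_high - y_low)
    worst = max(
        abs(y - (y_low + slope * (p - p_low)))
        for p, y in zip(pressures, outputs)
    )
    return worst / span * 100.0


# Hypothetical readings: pressure in psi, output in volts.
pressures = [0, 25, 50, 75, 100]
outputs = [0.00, 2.53, 5.05, 7.53, 10.00]
print(round(endpoint_nonlinearity_percent_span(pressures, outputs), 3))
```

With this data the largest deviation from the endpoint line is 0.05 V at mid-range, or about 0.5% of span.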

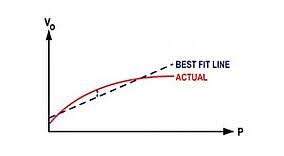

BFSL Method to measure pressure sensor accuracy

BFSL stands for "Best Fit Straight Line," a method used to determine the accuracy of pressure sensors by assessing their linearity within a specified pressure range. The goal of the BFSL method is to evaluate how well the sensor's output aligns with a straight line when plotted against the actual applied pressures within its operating range.

Here are the steps involved in using the BFSL method to measure pressure sensor accuracy:

Fig 6. Best Fit Straight Line (BFSL) method
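
The idea can be sketched in a few lines. Definitions of the "best fit" line vary between manufacturers; this example assumes an ordinary least-squares fit, which is one common choice and not necessarily the one a given datasheet uses. The data is the same kind of hypothetical 0 to 100 psi, 0 to 10 V sweep used for illustration only.

```python
def least_squares_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def bfsl_nonlinearity_percent_fs(pressures, outputs, full_scale_output):
    """Max deviation of the outputs from the fitted line, in % of full-scale output."""
    slope, intercept = least_squares_line(pressures, outputs)
    worst = max(
        abs(y - (slope * p + intercept)) for p, y in zip(pressures, outputs)
    )
    return worst / full_scale_output * 100.0


# Hypothetical readings: pressure in psi, output in volts.
pressures = [0, 25, 50, 75, 100]
outputs = [0.00, 2.53, 5.05, 7.53, 10.00]
print(round(bfsl_nonlinearity_percent_fs(pressures, outputs, 10.0), 3))
```

For this data the fitted line is roughly 0.1 V/psi with a 0.022 V intercept, and the worst deviation is about 0.28% FS. A line forced through the two end readings of the same data would show a 0.05 V (0.5%) deviation, which is why a BFSL figure usually looks better than an endpoint figure for the same sensor.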

What does BSL stand for?

BSL commonly stands for "Best Straight Line," a method used to assess the linearity of pressure sensors or transducers. This method involves evaluating how closely the sensor's output matches a straight line when plotted against the actual applied pressures within its operating range.

The working principle of the BSL method involves:

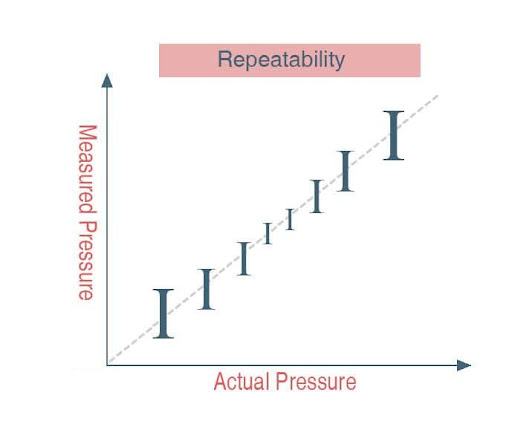

The repeatability of the pressure sensor

Repeatability, in the context of pressure sensors, refers to the sensor's ability to produce consistent and near-identical output readings when exposed to the same or similar pressure conditions under unchanged environmental and operational circumstances. It assesses the sensor's reliability in reproducing consistent results when subjected to repeated measurements at a specific pressure point or within a defined pressure range. A highly repeatable sensor will yield nearly the same output readings when the same pressure is applied multiple times, highlighting its stability and consistency in measurement.

To evaluate repeatability, engineers conduct multiple trials where they apply the same pressure to the sensor repeatedly, ensuring that external factors such as temperature, humidity, and other environmental conditions remain constant. By comparing the output readings obtained from each trial, the degree of variation or deviation among the readings is analyzed. Lower variation indicates higher repeatability, signifying that the sensor can consistently reproduce its output readings, which is crucial for applications requiring precision and reliability in pressure measurements, especially in critical or controlled environments.
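
A simple way to summarize such trials is the spread between the highest and lowest reading, expressed as a percentage of full scale. This is a sketch under that assumption; some datasheets use a statistical measure such as standard deviation instead, and the trial data here is hypothetical.

```python
def repeatability_percent_fs(readings, full_scale):
    """Spread (max minus min) of repeated readings at one pressure, in % FS."""
    if len(readings) < 2:
        raise ValueError("need at least two readings to assess repeatability")
    return (max(readings) - min(readings)) / full_scale * 100.0


# Hypothetical: 50 psi applied five times to a 0 to 100 psi sensor.
trials = [50.02, 50.05, 49.98, 50.01, 50.03]
print(round(repeatability_percent_fs(trials, full_scale=100.0), 3))
```

The five trials differ by at most 0.07 psi, so the repeatability in this example is about 0.07% of full scale.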

Fig 7. Repeatability

Long-Term Stability

Long-term stability indicates how much the pressure measured by the sensor deviates under normal conditions over a specified period.

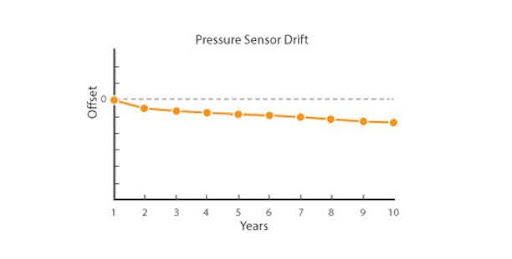

Drift

Drift is defined as a percentage of full scale over a period, normally 12 months. It is the gradual degradation or change of the sensor's components, which causes them to deviate from their initial calibration.

Drift causes the accuracy of the pressure sensor or transducer to decrease over time, so its readings become progressively less reliable.
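
To get a feel for what a drift figure means in pressure units, the specification can be scaled by full scale and elapsed time. The sketch below assumes drift accumulates linearly, which is a simplification rather than a guarantee, and the 0.2% FS per 12 months figure is a hypothetical value.

```python
def projected_drift(full_scale, drift_percent_fs_per_year, months):
    """Estimated drift in pressure units, assuming linear accumulation over time."""
    return full_scale * (drift_percent_fs_per_year / 100.0) * (months / 12.0)


# Hypothetical: 0 to 100 psi sensor, 0.2% FS per 12 months, 18 months since calibration.
print(round(projected_drift(full_scale=100.0, drift_percent_fs_per_year=0.2, months=18), 3))
```

Under these assumptions the sensor could be off by about 0.3 psi after 18 months, which can help when deciding how often to recalibrate.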

Fig 8. Pressure sensor drift

Which method of measuring pressure sensor accuracy is the most accurate?

There isn't a singular "most accurate" method for measuring pressure sensor accuracy, as different methods offer unique insights into different aspects of sensor performance. The most suitable method often depends on the specific requirements of the application and the characteristics being evaluated.

Several methods are commonly used:

Interpreting accuracy specifications for different pressure ranges

Interpreting accuracy specifications for pressure sensors across different pressure ranges involves understanding how the sensor's accuracy might vary based on the applied pressure within its operating limits. Manufacturers often provide accuracy specifications at different points or ranges within the sensor's full-scale range.

For instance:

Additionally, understanding whether accuracy specifications are absolute or include factors such as linearity, hysteresis, or repeatability can provide a clearer picture of the sensor's performance under various pressure conditions. Comparing accuracy specifications from different manufacturers or models can aid in selecting the most suitable sensor for a particular application based on its accuracy requirements across different pressure ranges.

Example

Gems Sensors 3100 Series Pressure Transducers specify their accuracy as 0.25% FS (Full Scale) or less.

Full Scale is the span from zero applied pressure to the top of the sensor's measuring range. The percentage is taken of this value, not of the pressure being measured.

For example:

For a sensor with a 0 to 100 psi measuring range, the Full Scale is 100 psi.

If you are measuring 100 psi exactly, the output should read 100 psi +/- 0.25% of 100 psi or 100 psi +/- 0.25 psi.

With the same 0 to 100 psi measuring range, if you are measuring only 10 psi, the output should read 10 psi +/- 0.25% of 100 psi (Full Scale), or 10 psi +/- 0.25 psi. Relative to the reading, that is +/- 2.5%.
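
The arithmetic in this example can be written as a short sketch. The function names are illustrative; the numbers are the 0 to 100 psi range and 0.25% FS figure used above.

```python
def error_band(full_scale, accuracy_percent_fs):
    """Absolute error band (same units as full_scale) for a % FS accuracy spec."""
    return full_scale * accuracy_percent_fs / 100.0


def error_percent_of_reading(reading, full_scale, accuracy_percent_fs):
    """The same error band expressed as a percentage of the measured value."""
    return error_band(full_scale, accuracy_percent_fs) / reading * 100.0


band = error_band(full_scale=100.0, accuracy_percent_fs=0.25)
for reading in (100.0, 10.0):
    relative = error_percent_of_reading(reading, 100.0, 0.25)
    print(f"{reading} psi +/- {band} psi ({relative}% of reading)")
```

The error band stays at 0.25 psi across the range, so the error relative to the reading grows as the measured pressure falls: 0.25% at 100 psi but 2.5% at 10 psi. This is why a sensor is best chosen so that typical readings sit well up in its range.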

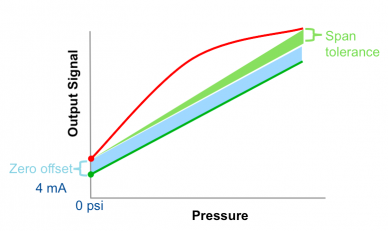

Zero Offset

Zero Offset is defined as the amount of variation in output from the ideal value at the lowest point of the measurement range.

It is expressed as a percentage of the full span or in measurement or signal units such as millivolts or milliamps. Zero offset is typically listed as a separate item on a pressure sensor's specification sheet.
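
Converting a zero offset from signal units to a percentage of span is a one-line calculation. The sketch below assumes a hypothetical 4 to 20 mA transmitter that outputs 4.02 mA with no pressure applied; the values are illustrative only.

```python
def zero_offset_percent_span(measured_zero, ideal_zero, ideal_full_scale):
    """Zero offset as a percentage of the ideal output span (signed)."""
    span = ideal_full_scale - ideal_zero
    return (measured_zero - ideal_zero) / span * 100.0


# Hypothetical 4 to 20 mA transmitter reading 4.02 mA at zero pressure.
print(round(zero_offset_percent_span(measured_zero=4.02, ideal_zero=4.0, ideal_full_scale=20.0), 3))
```

An offset of 0.02 mA on a 16 mA span corresponds to about 0.125% of span.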

Fig 9. Zero offset

What are the factors that affect accuracy?

Several factors can affect the accuracy of pressure sensors, influencing their ability to provide precise and reliable pressure measurements:

Referred Temperature Error

Referred Temperature Error, or RTE, is expressed as the maximum deviation in the positive or negative direction from measurements taken at a defined temperature, which is typically room temperature. It is given as a percentage of the full scale.

Temperature Compensation

Temperature Compensation is a correction applied to a measurement instrument to reduce errors attributed to temperature changes in a process media that is being measured or in the surrounding environment in which the instrument is being used.

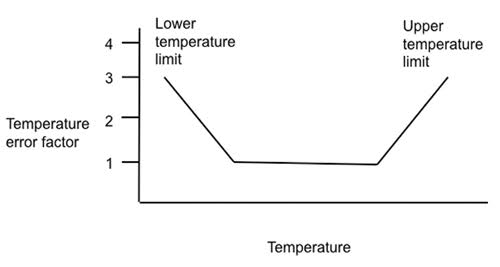

Temperature Error

Temperature is one of the most important factors affecting the accuracy of a pressure sensor.

The temperature error arises from the deviation of the measurement due to variations in the device or the ambient temperature.

It is usually expressed as the maximum error over all possible measurements between a minimum and a maximum temperature, known as the compensated temperature range. This range does not necessarily match the operating temperature range, which is often wider than the compensated temperature range for the pressure sensor.

Circuit design and compensation algorithms reduce temperature errors within the compensated temperature range. Outside that range the pressure sensor still operates, but the maximum error is not defined.

Threshold temperature limits are specified in the technical datasheet. A pressure sensor should not be operated beyond these limits because doing so can lead to mechanical and electrical damage.

Temperature error is usually expressed as a percentage of full span (% FS) over the entire compensated temperature range or as a percentage of full span (% FS) per degree Celsius, Kelvin, or Fahrenheit.
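
When the specification is given per degree, the error at a given temperature can be estimated by scaling with the distance from the reference temperature. The sketch below assumes a 25 °C reference, a 0 to 80 °C compensated range, and a coefficient of 0.02% FS per °C; all three are hypothetical values that would come from a real datasheet.

```python
def temperature_error_percent_fs(
    temp_c,
    coeff_percent_fs_per_c,
    reference_c=25.0,
    compensated_range_c=(0.0, 80.0),
):
    """Estimated maximum temperature error in % FS at temp_c.

    Raises ValueError outside the compensated range, where the error is not specified.
    """
    low, high = compensated_range_c
    if not low <= temp_c <= high:
        raise ValueError("temperature is outside the compensated range")
    return abs(temp_c - reference_c) * coeff_percent_fs_per_c


# Hypothetical: sensor operating at 60 degrees C with a 0.02% FS per degree C coefficient.
print(round(temperature_error_percent_fs(60.0, 0.02), 3))
```

At 60 °C this gives about 0.7% FS, or 0.7 psi on a 0 to 100 psi sensor. The function refuses to return a figure outside the compensated range, mirroring the fact that the datasheet makes no promise there.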

Fig 10. Sensor temperature error factors

Conclusion

Accurate pressure measurements are crucial across various industries and applications, and pressure sensor accuracy is influenced by multiple factors. Understanding the intricacies of pressure sensor accuracy involves considering calibration, environmental conditions, mechanical stress, and non-linear behaviors like hysteresis and non-linearity. Each of these factors can impact the sensor's ability to provide precise and reliable readings.

Different methods, such as comparison with reference standards, endpoint measurements, or linearity assessments, offer ways to evaluate and quantify a sensor's accuracy. However, no single method can fully capture all aspects of accuracy. Combining multiple evaluation techniques provides a more comprehensive understanding of a pressure sensor's performance.

Manufacturers strive to minimize these factors' effects by employing precise manufacturing processes, incorporating compensation mechanisms, and providing calibration procedures. Regular recalibration and maintenance are crucial for sustaining accurate readings over time.

Ultimately, selecting the right pressure sensor for a specific application requires considering accuracy specifications, understanding how those specifications apply across different pressure ranges, and aligning them with the application's requirements.

By acknowledging and addressing these factors affecting accuracy, users can make informed decisions, ensuring that pressure sensors deliver the needed precision and reliability for their intended purposes.

To recap

1. What does accuracy mean for a sensor? Accuracy refers to how closely a sensor's measurements match the actual value being measured.

2. How is accuracy typically expressed for sensors? Accuracy is often expressed as a percentage of full-scale output (%FSO) or in absolute units (e.g., psi, bar) representing the maximum permissible deviation from the true value.

3. What factors affect a sensor's accuracy? Factors include calibration, environmental conditions, hysteresis, linearity, mechanical stress, manufacturing variations, electronic noise, aging, and wear.

4. How does hysteresis relate to accuracy? Hysteresis is the phenomenon where a sensor's output differs for the same value depending on whether the input is increasing or decreasing. It can affect accuracy by introducing non-linear behavior.

5. How do you evaluate a sensor's accuracy? Evaluation involves various methods such as comparison with reference standards, endpoint measurements, linearity assessments, and analyzing hysteresis and repeatability.

6. What is the difference between accuracy and precision? Accuracy refers to how close a measurement is to the true value, while precision relates to the repeatability and consistency of measurements.

7. Why is calibration important for maintaining accuracy? Calibration ensures that a sensor's readings align with the true value. Over time, sensors may drift, and calibration helps bring them back to an accurate state.

8. How do environmental conditions impact sensor accuracy? Temperature, humidity, altitude variations, and other environmental factors can influence a sensor's performance and accuracy.

9. Can a sensor have different accuracy specifications at different pressure ranges? Yes, sensors might have varied accuracy specifications across different pressure ranges within their operating limits.

10. Why is understanding a sensor's accuracy crucial for selecting the right sensor? Understanding accuracy specifications helps in choosing a sensor that meets the precision requirements of the specific application, ensuring reliable measurements.

These FAQs provide a foundational understanding of accuracy and error specifications for sensors, assisting users in making informed decisions when selecting and utilizing sensors for diverse applications.

References

https://dwyer-inst.com/en/list/post/understanding-pressure-sensor-accuracy

https://www.setra.com/blog/accuracy-end-point-vs-best-fit-straight-line-method/

https://www.eastsensor.com/blog/pressure-sensor-repeatability/
