Calibration Unmasked: The Key to Reliable Pressure Readings

Calibration, though often overlooked, serves as the silent hero behind the accuracy and reliability of the measurements that shape our world. Picture this: precision instruments in labs, factories, and even medical devices can only deliver accurate data if they are properly calibrated. It's the behind-the-scenes process that keeps your car's speedometer reading true, your phone's barometer reporting air pressure faithfully, and your health monitoring devices providing reliable insights.

Within this realm, pressure sensor calibration holds particular significance. Pressure sensors are integral to industries spanning aviation, healthcare, manufacturing, and more, and they demand high precision: their ability to gauge pressure accurately affects safety, quality control, and performance. From deadweight testers in calibration labs to electrical simulation of sensor signals, these calibration methods are what make pressure measurements dependable.

Understanding these calibration methods not only demystifies their importance but also unveils the intricate processes ensuring the seamless functioning of our modern world. Join the journey through these calibration methods and witness the meticulous techniques ensuring accuracy in the very measurements we rely on daily.

Fig 1. Pressure gauge manual calibration

How do you calibrate a pressure sensor?

Calibrating a pressure sensor typically involves several steps to ensure its accuracy and reliability:

Calibration methods

Calibration methods vary depending on the type of instrument being calibrated and the required level of accuracy. Here are several common calibration methods used across different industries:

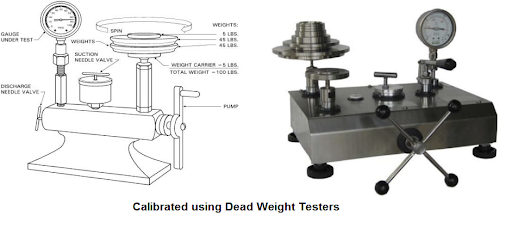

Deadweight Testers

Deadweight testers are precision instruments that calibrate pressure sensors by applying known pressures. At the core of a deadweight tester is a piston-cylinder assembly: a piston that fits closely inside a precision-machined cylinder and is loaded with calibrated weights. The weights exert a known force on the piston, and because pressure equals force divided by area, this generates a known pressure in the system. That pressure is transmitted through a fluid (usually oil or water) to the sensor being calibrated. By carefully controlling the weights and knowing the piston's effective area, deadweight testers produce highly accurate and stable pressures for calibration. Because of this accuracy and reliability, they are often used in laboratories and calibration facilities.
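The pressure a deadweight tester generates follows directly from P = F / A, where F is the weight of the piston plus its stacked masses. The Python sketch below computes it under stated assumptions: the only correction applied is air buoyancy on the weights, and the default air and weight densities are illustrative values. A real tester's certificate gives its own effective area and further corrections (such as thermal expansion) that are omitted here.

```python
# Sketch: pressure generated by a deadweight tester, P = F / A.
# Assumptions: local gravity is known, and the only correction applied is
# air buoyancy on the weights. Thermal and elastic corrections are omitted.

STANDARD_GRAVITY = 9.80665  # m/s^2


def deadweight_pressure(
    mass_kg: float,
    piston_area_m2: float,
    local_gravity: float = STANDARD_GRAVITY,
    air_density: float = 1.2,        # kg/m^3, assumed ambient air
    weight_density: float = 7920.0,  # kg/m^3, assumed stainless-steel weights
) -> float:
    """Return the pressure in pascals generated by the loaded piston.

    mass_kg is the total mass of the piston plus all stacked weights.
    piston_area_m2 is the effective area, normally taken from the tester's certificate.
    """
    if piston_area_m2 <= 0:
        raise ValueError("piston area must be positive")
    # The surrounding air buoys the weights up slightly, reducing the net force.
    buoyancy_factor = 1.0 - air_density / weight_density
    force_n = mass_kg * local_gravity * buoyancy_factor
    return force_n / piston_area_m2


def pa_to_bar(pressure_pa: float) -> float:
    return pressure_pa / 1e5


if __name__ == "__main__":
    # Hypothetical load: 10.2 kg on a 1 cm^2 (1e-4 m^2) piston.
    p = deadweight_pressure(10.2, 1e-4)
    print(f"{p:.0f} Pa = {pa_to_bar(p):.3f} bar")
```

With these hypothetical numbers, about 10.2 kg on a 1 cm² piston produces roughly 10 bar, which shows why small pistons and modest masses are enough to reach high calibration pressures.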

Steps for using a deadweight tester in pressure sensor calibration:

Fig 2. Calibration using a deadweight tester

Visual Inspection methods

Visual inspection is a fundamental method used to assess the physical condition of an instrument or equipment. It involves a thorough examination of the device's external components, looking for any visible signs of damage, wear, or irregularities that could affect its functionality or accuracy. During this process, technicians inspect the instrument for scratches, dents, corrosion, loose parts, or any other visible flaws that might compromise its performance. Additionally, they check for proper labeling, serial numbers, and overall cleanliness, ensuring that the instrument meets safety and quality standards.

The inspection process often follows a checklist that outlines specific areas to examine. This could include verifying the integrity of connectors, cables, or probes, examining display screens or indicators for readability and functionality, and assessing the general structural integrity of the instrument. Visual inspection serves as an initial step before more comprehensive calibration procedures, helping identify any obvious issues that might require repair or further attention. Regular visual inspections are crucial for preventive maintenance, prolonging the lifespan of instruments and ensuring their accurate and safe operation within various industries.
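A visual inspection checklist is easy to keep as structured data, so that every area is recorded and any failure stops the instrument from moving on to calibration. This Python sketch is a hypothetical illustration: the checklist areas are drawn from the inspection points described above, and the rule that every area must be inspected and pass before calibration is an assumption to adapt to your own procedure.

```python
from dataclasses import dataclass, field

# Hypothetical checklist areas, based on the inspection points described above.
CHECKLIST = [
    "Housing: scratches, dents, corrosion",
    "Loose parts and fittings",
    "Connectors, cables and probes",
    "Display or indicator readability",
    "Labels and serial number",
    "General cleanliness",
]


@dataclass
class Finding:
    area: str
    passed: bool
    note: str = ""


@dataclass
class VisualInspection:
    serial_number: str
    findings: list = field(default_factory=list)

    def record(self, area: str, passed: bool, note: str = "") -> None:
        self.findings.append(Finding(area, passed, note))

    def failures(self) -> list:
        return [f for f in self.findings if not f.passed]

    def missing_areas(self) -> list:
        checked = {f.area for f in self.findings}
        return [area for area in CHECKLIST if area not in checked]

    def ready_for_calibration(self) -> bool:
        # Proceed only when every checklist area was inspected and none failed.
        return not self.missing_areas() and not self.failures()


if __name__ == "__main__":
    inspection = VisualInspection("SN-0001")
    for area in CHECKLIST:
        inspection.record(area, True)
    inspection.record("Connectors, cables and probes", False, "bent connector pin")
    print("Ready:", inspection.ready_for_calibration())
    for f in inspection.failures():
        print("Needs attention:", f.area, "-", f.note)
```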

How do you use this method?

Performing a visual inspection involves a systematic examination of an instrument or equipment. Here's a step-by-step guide on how to conduct a visual inspection:

Fig 3. Multimeter calibration

Functional Check methods

A functional check is a critical step in ensuring that an instrument or device operates as intended and meets its performance requirements. To conduct a functional check, start by reviewing the manufacturer's documentation or user manual to understand the standard operations and functionalities of the instrument. This provides a guideline for the specific tests you need to perform.

Once you're familiar with the expected functionalities, proceed with the functional check. This typically involves systematically testing various components and features of the instrument. For example, if it's a temperature sensor, verify its ability to accurately measure temperatures within its specified range. If it's a digital multimeter, test its voltage, current, and resistance measurement capabilities. Use known inputs or standards to verify the instrument's readings against expected values, if available.

During the functional check, pay attention to any irregularities or deviations from expected performance. Test different modes, settings, or operational features as outlined in the user manual. Ensure buttons, switches, displays, and interfaces work as intended. Note any errors, glitches, or inconsistencies encountered during the testing process. Functional checks are crucial to identify potential issues with the instrument's operation, allowing for necessary adjustments, repairs, or calibration to ensure accurate and reliable performance. Regular functional checks as part of a maintenance schedule help catch problems early and maintain the instrument's functionality.
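The "known input, expected value" part of a functional check can be written as a small table of tests, each with its own tolerance. In this Python sketch the instrument readings are simulated with simple functions (a hypothetical multimeter with a small voltage gain error and a resistance offset); in a real check the readings would come from the instrument's display or interface, and the tolerances from the manufacturer's specifications.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class FunctionTest:
    name: str                       # e.g. "DC voltage, 10 V range"
    known_input: float              # value applied from a known source or standard
    tolerance: float                # allowed absolute deviation, same units
    read: Callable[[float], float]  # returns the instrument's reading for that input


def run_functional_check(tests: List[FunctionTest]) -> List[Tuple[str, float, bool]]:
    """Apply each known input and compare the reading with the expected value."""
    results = []
    for test in tests:
        reading = test.read(test.known_input)
        deviation = reading - test.known_input
        results.append((test.name, deviation, abs(deviation) <= test.tolerance))
    return results


if __name__ == "__main__":
    # Simulated multimeter behaviour, standing in for real readings.
    tests = [
        FunctionTest("DC voltage, 10 V", 10.0, 0.02, lambda v: v * 1.001),
        FunctionTest("Resistance, 1 kOhm", 1000.0, 0.2, lambda r: r + 0.5),
    ]
    for name, deviation, ok in run_functional_check(tests):
        print(f"{name}: deviation {deviation:+.3f} -> {'OK' if ok else 'CHECK'}")
```

Keeping each function's tolerance alongside its test makes it easy to note exactly which mode or range misbehaved, which is the record the paragraph above recommends.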

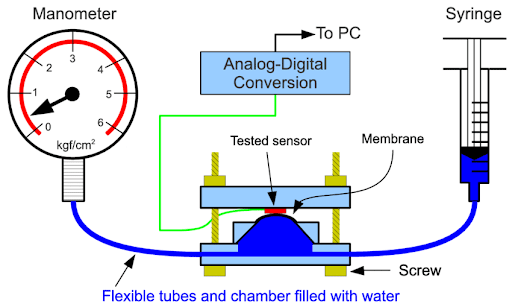

Zero and Span Adjustment

Zero and span adjustment is a calibration process used to ensure that instruments or sensors accurately measure within their specified range. The "zero" refers to the point where the instrument should read zero when there's no input or stimulus, while the "span" represents the full measurement range of the instrument.

During zero adjustment, the instrument is calibrated to read zero when there's no input or when the measured quantity is at its minimum. This adjustment ensures that the instrument doesn't exhibit any offset or bias when it should be registering no measurement. Span adjustment involves setting the instrument to accurately measure across its entire range, ensuring that it provides accurate readings at both minimum and maximum values within that range. Calibration technicians often use reference standards or known inputs to adjust these settings, making precise modifications to align the instrument's output with the expected values at zero and span points.
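Mathematically, a zero and span adjustment amounts to a straight-line correction through two points: the reference values at zero and at full scale define a gain and an offset. The Python sketch below computes them for a hypothetical 0-100 psi gauge that reads 0.8 psi high at zero and 1.6 psi high at full scale. On many analog instruments the physical zero and span adjustments interact, so the adjustment is often repeated (zero, then span) until both settle.

```python
def zero_span_correction(ref_zero: float, raw_zero: float,
                         ref_span: float, raw_span: float) -> tuple:
    """Return (gain, offset) so that gain * raw + offset reproduces the
    reference values at the zero and span points."""
    if raw_span == raw_zero:
        raise ValueError("zero and span readings must differ")
    gain = (ref_span - ref_zero) / (raw_span - raw_zero)
    offset = ref_zero - gain * raw_zero
    return gain, offset


def apply_correction(raw: float, gain: float, offset: float) -> float:
    return gain * raw + offset


if __name__ == "__main__":
    # Hypothetical 0-100 psi gauge reading 0.8 at zero and 101.6 at full scale.
    gain, offset = zero_span_correction(0.0, 0.8, 100.0, 101.6)
    for raw in (0.8, 51.2, 101.6):
        print(f"raw {raw:6.1f} -> corrected {apply_correction(raw, gain, offset):6.2f}")
```

Because the correction is a straight line, the midpoint reading of 51.2 maps to 50.0; any real nonlinearity between the two points would remain uncorrected.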

The zero and span adjustments are critical for maintaining the accuracy and reliability of instruments. These adjustments help eliminate errors such as drift or offset that can occur over time, ensuring that the instrument provides accurate measurements throughout its operational range. Regular recalibration of zero and span points is essential to account for any changes or deviations that might affect the instrument's performance.

Step by step guide for Zero and Span Adjustment method

Here's a step-by-step guide for zero and span adjustment in calibration:

Fig 4. Calibration system of pressure sensor

What are important tips about Zero and Span Adjustment?

Here are some important tips to consider when performing zero and span adjustment during calibration:

Comparative Calibration method

Comparative calibration is a method used to calibrate instruments by comparing their readings against a reference standard or a device with higher accuracy that has been previously calibrated. This method involves placing the instrument being calibrated and the reference standard in similar conditions and then measuring the same input or quantity with both devices. The goal is to determine any deviations or discrepancies between the readings of the instrument being calibrated and the reference standard, allowing for adjustments to be made to align the instrument's output with the known accurate readings of the reference standard.

To conduct a comparative calibration, start by ensuring that the reference standard used is traceable to a national or international standard and has a higher accuracy than the instrument being calibrated. Place both instruments in stable environmental conditions and apply known inputs or quantities within the operational range of the instruments. Record the readings obtained from both the instrument being calibrated and the reference standard, then analyze the differences in the measurements. These differences help identify any errors or inaccuracies in the instrument being calibrated and guide adjustments to improve its accuracy.
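The bookkeeping of a comparative calibration is straightforward: subtract the reference reading from the device-under-test (DUT) reading at each point, after first confirming that the reference is accurate enough. The Python sketch below requires a minimum 4:1 ratio between the DUT tolerance and the reference uncertainty, a common rule of thumb assumed here rather than a requirement stated in this article; the readings in the example are invented.

```python
from typing import List, Sequence, Tuple


def comparison_errors(dut: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Error of the device under test at each point: DUT reading minus reference."""
    if len(dut) != len(reference):
        raise ValueError("need one reference reading per DUT reading")
    return [d - r for d, r in zip(dut, reference)]


def evaluate_comparison(
    dut: Sequence[float],
    reference: Sequence[float],
    dut_tolerance: float,
    reference_uncertainty: float,
    min_ratio: float = 4.0,  # assumed 4:1 rule of thumb
) -> Tuple[List[float], bool]:
    # Refuse to judge the DUT if the reference is not sufficiently better than it.
    ratio = dut_tolerance / reference_uncertainty
    if ratio < min_ratio:
        raise ValueError(f"reference only {ratio:.1f}:1 better than DUT tolerance")
    errors = comparison_errors(dut, reference)
    return errors, all(abs(e) <= dut_tolerance for e in errors)


if __name__ == "__main__":
    # Invented readings from a pressure gauge and a reference standard.
    dut = [0.05, 25.10, 49.92, 75.08, 100.15]
    ref = [0.00, 25.00, 50.00, 75.00, 100.00]
    errors, ok = evaluate_comparison(dut, ref, dut_tolerance=0.25, reference_uncertainty=0.05)
    for r, e in zip(ref, errors):
        print(f"{r:7.2f}: error {e:+.2f}")
    print("Within tolerance" if ok else "Adjustment needed")
```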

This method is commonly used when calibrating instruments such as thermometers, pressure gauges, multimeters, or any device where the accuracy of measurements is critical. It's essential to follow proper procedures and ensure that the comparative calibration is performed by trained personnel using calibrated and reliable reference standards to achieve accurate calibration results.

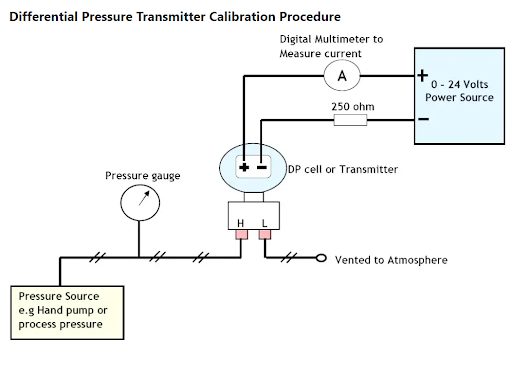

Fig 5. Differential pressure sensor calibration

How does the Electrical Simulation method work for calibrating a pressure sensor?

The Electrical Simulation method is used with pressure sensors that produce an electrical output signal, such as a voltage or current, in response to pressure changes. For instance, with a pressure sensor that generates a voltage corresponding to the applied pressure, electrical simulation involves generating those same voltage signals with a calibrator instead of applying real pressure. This lets technicians calibrate the transmitter, signal-conditioning, or readout electronics that interpret the sensor's output; because no physical pressure is applied, the pressure-sensing element itself is not tested this way.
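For a sensor built around a strain-gauge bridge, the simulated signal is simple to compute: the bridge output is ratiometric, so at pressure P it is roughly sensitivity (mV/V) times excitation (V) times P divided by full scale. The Python sketch below assumes an ideal, linear bridge with zero offset. The `readout` function is a hypothetical stand-in for the instrument being checked; in practice a millivolt source would feed the computed signal into it.

```python
from typing import Callable, List, Tuple


def simulated_bridge_mv(pressure: float, full_scale: float,
                        sensitivity_mv_per_v: float, excitation_v: float) -> float:
    """Ideal output (mV) of a ratiometric strain-gauge bridge at `pressure`."""
    if not 0.0 <= pressure <= full_scale:
        raise ValueError("pressure outside sensor range")
    return sensitivity_mv_per_v * excitation_v * pressure / full_scale


def check_readout(readout: Callable[[float], float], test_pressures: List[float],
                  full_scale: float, sensitivity_mv_per_v: float,
                  excitation_v: float, tolerance: float) -> List[Tuple[float, float, bool]]:
    """Inject the simulated signal for each pressure and compare what the readout shows."""
    results = []
    for p in test_pressures:
        mv = simulated_bridge_mv(p, full_scale, sensitivity_mv_per_v, excitation_v)
        shown = readout(mv)
        results.append((p, shown, abs(shown - p) <= tolerance))
    return results


if __name__ == "__main__":
    # Hypothetical 0-200 bar sensor rated 2 mV/V at 10 V excitation (20 mV full scale).
    def readout(mv: float) -> float:
        # Hypothetical readout under test with a 0.2 % gain error.
        return mv * (200.0 / 20.0) * 1.002

    for p, shown, ok in check_readout(readout, [0, 50, 100, 150, 200], 200.0, 2.0, 10.0, 0.5):
        print(f"{p:6.1f} bar simulated -> {shown:7.2f} bar shown {'OK' if ok else 'ADJUST'}")
```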

Here's a general idea of how Electrical Simulation can be used for calibrating a pressure sensor:

Multi-point Calibration

Multi-point calibration involves calibrating an instrument or sensor at multiple known input points across its operational range to ensure accuracy and reliability across various measurement values. Unlike single-point calibration, which adjusts at only one reference point, multi-point calibration accounts for potential nonlinearities or deviations throughout the instrument's range, improving its precision.

During multi-point calibration, technicians apply different known inputs or quantities at various intervals within the instrument's measurement range. For instance, a pressure sensor might be calibrated at low, medium, and high pressure levels relevant to its application. At each point, the sensor's output is compared against the expected values or those obtained from a reference standard. Any discrepancies are noted, and adjustments are made to minimize errors and ensure the instrument provides accurate readings across the entire range. This keeps the instrument's response consistent and accurate at different measurement points, improving its reliability across diverse applications and environments.
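One common way to analyse multi-point data is to fit a best-fit straight line through the readings, report the worst deviation from that line as nonlinearity in percent of full scale, and then correct readings by interpolating between the calibration points. The Python sketch below does all three; the test data are invented, and piecewise-linear interpolation is just one possible correction scheme (a polynomial fit is another).

```python
from typing import List, Sequence, Tuple


def least_squares_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def nonlinearity_percent_fs(applied: Sequence[float], readings: Sequence[float],
                            full_scale: float) -> float:
    """Worst deviation of the readings from the best-fit line, in % of full scale."""
    slope, intercept = least_squares_line(applied, readings)
    worst = max(abs(r - (slope * p + intercept)) for p, r in zip(applied, readings))
    return 100.0 * worst / full_scale


def corrected_value(reading: float, readings: Sequence[float],
                    applied: Sequence[float]) -> float:
    """Piecewise-linear correction between neighbouring calibration points."""
    pairs: List[Tuple[float, float]] = sorted(zip(readings, applied))
    if len(pairs) < 2:
        raise ValueError("need at least two calibration points")
    for (r0, a0), (r1, a1) in zip(pairs, pairs[1:]):
        if reading <= r1:
            break
    # If no segment matched, the loop variables still hold the last segment,
    # so readings above the calibrated range are extrapolated from it.
    return a0 + (a1 - a0) * (reading - r0) / (r1 - r0)


if __name__ == "__main__":
    # Invented five-point data for a 0-100 unit sensor.
    applied = [0.0, 25.0, 50.0, 75.0, 100.0]
    readings = [0.0, 25.4, 50.6, 75.4, 100.0]
    print(f"Nonlinearity: {nonlinearity_percent_fs(applied, readings, 100.0):.2f} % FS")
    print(f"Reading 38.0 corrects to {corrected_value(38.0, readings, applied):.2f}")
```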

Step by step guide for Multi-point Calibration

Here's a step-by-step guide for performing a multi-point calibration:

Important tips for the Multi-point Calibration method

Here are some important tips to consider when conducting multi-point calibration:

Fig 6. Pressure gauge digital calibration

Comparing Multi-point Calibration and Zero and Span Adjustment

Here's a comparison table outlining the differences between Multi-point Calibration and Zero and Span Adjustment methods:

Table 1. Comparing Multi-point Calibration and Zero and Span Adjustment method

| Aspect | Multi-point Calibration | Zero and Span Adjustment |
|---|---|---|
| Purpose | Adjusts the instrument at multiple points across its range | Sets the instrument's zero and full-scale measurement points |
| Calibration points | Multiple points across the instrument's operational range | Typically two points: zero and span |
| Accuracy coverage | Verifies accuracy at several intervals within the range | Establishes accuracy at the two endpoints and assumes a linear response between them |
| Method complexity | More complex; requires calibration at multiple intervals | Relatively simple; focuses on two specific points |
| Nonlinearity | Addresses nonlinearity by capturing deviations across the range | May not capture nonlinearity between the two calibration points |
| Adjustments | Made at multiple points | Made specifically at the zero and span points |
| Comprehensive coverage | Checks accuracy under various operating conditions | May not reveal inaccuracies between zero and span |
| Typical application | Instruments requiring precise readings across their range | Basic calibration of linear instruments |

Both methods aim to enhance an instrument's accuracy, but they differ in their approach to calibration. Multi-point calibration ensures accuracy at various intervals within the range, addressing potential nonlinearities. On the other hand, zero and span adjustment focuses on two specific points to set the instrument's baseline and full-scale measurements, which might not capture potential inaccuracies between these points. The choice between these methods depends on the instrument type, accuracy requirements, and the extent of calibration needed.
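The claim that a two-point adjustment can miss errors between its endpoints is easy to check numerically. The Python sketch below invents a sensor whose response bows away from a straight line (exact at 0 and 100, largest error at mid-range), corrects it once using only the zero and span points and once using five calibration points, and reports the worst remaining error across the range. The sensor model and its coefficient `K` are purely hypothetical.

```python
K = 0.00024  # hypothetical nonlinearity coefficient


def sensor_reading(p: float) -> float:
    """Hypothetical sensor: exact at 0 and 100, bows upward in between."""
    return p + K * p * (100.0 - p)


def interpolate(x: float, xs: list, ys: list) -> float:
    """Piecewise-linear lookup; xs must be increasing."""
    for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:]):
        if x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    # Beyond the last point: extrapolate the final segment.
    return ys[-1] + (ys[-1] - ys[-2]) * (x - xs[-1]) / (xs[-1] - xs[-2])


def make_correction(points: list):
    """Build a correction that maps readings back to pressures via the calibration points."""
    readings = [sensor_reading(p) for p in points]
    return lambda r: interpolate(r, readings, points)


def max_error(correct, test_points: list) -> float:
    return max(abs(correct(sensor_reading(p)) - p) for p in test_points)


test_points = [float(p) for p in range(0, 101)]
two_point_error = max_error(make_correction([0.0, 100.0]), test_points)
multi_point_error = max_error(make_correction([0.0, 25.0, 50.0, 75.0, 100.0]), test_points)

if __name__ == "__main__":
    print(f"Zero and span only : worst error {two_point_error:.3f}")
    print(f"Five-point         : worst error {multi_point_error:.3f}")
```

With this made-up sensor, the two-point correction leaves a worst-case error of 0.6 units at mid-range, while the five-point correction brings it below 0.05 units.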

What is an Automated Calibration System?

Automated Calibration Systems are sophisticated setups designed to streamline and automate the calibration process for various instruments and devices. These systems integrate advanced software, precision equipment, and often robotic or computer-controlled mechanisms to perform calibration tasks efficiently and with high accuracy.

One of the primary advantages of Automated Calibration Systems is their ability to reduce human error significantly. By automating calibration procedures, these systems minimize manual intervention, ensuring consistency and precision in the calibration process. They can handle large volumes of instruments and perform repetitive tasks with exceptional accuracy, saving time and resources for calibration facilities.

These systems often feature calibration software that manages calibration schedules, records data, and generates reports, enhancing traceability and compliance with standards. Automated Calibration Systems are commonly utilized in industries with stringent calibration requirements, such as aerospace, pharmaceuticals, or manufacturing, where precision and traceability are critical. Despite the initial investment and setup costs, these systems offer long-term benefits by improving efficiency, reducing downtime, and ensuring compliance with calibration standards.
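The core of an automated run can be pictured as a loop: command a setpoint, wait for the pressure to stabilise, read the reference and the device under test, and log a pass/fail result for the report. The Python sketch below is a hypothetical illustration, not any vendor's software: `set_pressure`, `read_reference`, and `read_dut` are assumed callables standing in for real instrument drivers, `SimulatedRig` fakes the hardware, and the stability rule (last five reference samples within 0.01 units) is an arbitrary choice.

```python
import statistics
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PointResult:
    setpoint: float
    reference: float
    dut: float
    error: float
    passed: bool


def wait_until_stable(read_reference: Callable[[], float], window: int = 5,
                      max_spread: float = 0.01, max_samples: int = 200) -> float:
    """Sample the reference until the last `window` readings vary by at most max_spread."""
    samples: List[float] = []
    for _ in range(max_samples):
        samples.append(read_reference())
        recent = samples[-window:]
        if len(recent) == window and max(recent) - min(recent) <= max_spread:
            return statistics.fmean(recent)
    raise TimeoutError("pressure did not stabilise")


def run_sequence(setpoints: List[float], set_pressure: Callable[[float], None],
                 read_reference: Callable[[], float], read_dut: Callable[[], float],
                 tolerance: float) -> List[PointResult]:
    results = []
    for sp in setpoints:
        set_pressure(sp)                     # command the (hypothetical) pressure controller
        ref = wait_until_stable(read_reference)
        dut = read_dut()
        err = dut - ref
        results.append(PointResult(sp, ref, dut, err, abs(err) <= tolerance))
    return results


def format_report(results: List[PointResult]) -> str:
    return "\n".join(
        f"{r.setpoint:8.2f} {r.reference:10.4f} {r.dut:10.4f} {r.error:+8.4f} "
        f"{'PASS' if r.passed else 'FAIL'}"
        for r in results
    )


class SimulatedRig:
    """Stand-in for real hardware: pressure settles toward the setpoint,
    and the DUT reads the current pressure plus a fixed offset."""

    def __init__(self, dut_offset: float = 0.05):
        self.target = 0.0
        self.current = 0.0
        self.dut_offset = dut_offset

    def set_pressure(self, setpoint: float) -> None:
        self.target = setpoint

    def read_reference(self) -> float:
        # Each sample moves the pressure halfway toward the setpoint.
        self.current += 0.5 * (self.target - self.current)
        return self.current

    def read_dut(self) -> float:
        return self.current + self.dut_offset


if __name__ == "__main__":
    rig = SimulatedRig()
    results = run_sequence([0.0, 50.0, 100.0], rig.set_pressure,
                           rig.read_reference, rig.read_dut, tolerance=0.1)
    print(format_report(results))
```

Separating the driver callables from the sequencing logic is what lets the same loop run against simulated hardware for testing and real instruments in production.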

Working principle of Automated Calibration Systems

Automated Calibration Systems operate on a combination of advanced software, precision instruments, and often robotic or computer-controlled mechanisms to carry out calibration tasks. The working principle involves several key components and steps:

Are Automated Calibration Systems reliable?

Automated Calibration Systems can be highly reliable when properly designed, implemented, and maintained. However, their reliability depends on various factors:

Fig 7. High accuracy pressure sensor calibrator

Important tips to observe with Automated Calibration Systems

Here are some crucial tips to ensure the effectiveness and reliability of Automated Calibration Systems:

What software is used in Automated Calibration Systems?

Automated Calibration Systems utilize various types of software to manage and control the calibration process. These software applications are designed to streamline workflows, automate tasks, record data, and ensure accuracy in calibration. Here are some types of software commonly used in Automated Calibration Systems:

What are the tools used in Automated Calibration Systems?

Automated Calibration Systems incorporate various tools and equipment to automate and perform precise calibration tasks efficiently. These tools are integrated into the system to ensure accurate measurements, adjustments, and data collection. Here are some common tools used in Automated Calibration Systems:

Fig 8. Calibrating a P/I sensor

Which calibration instruments are used in Automated Calibration Systems?

Automated Calibration Systems utilize various calibration instruments to perform precise and automated calibration across different types of measurement parameters. These instruments are integral components of the system, allowing for accurate adjustment, verification, and validation of other instruments or devices being calibrated. Here are some common calibration instruments used in Automated Calibration Systems:

Safety Equipment Used in Automated Calibration Systems

Safety is paramount in any calibration process, especially in Automated Calibration Systems where various instruments and equipment are involved. Here is some essential safety equipment commonly used in these systems:

Fig 9. Thermocouple calibration

How can you tell whether calibration results are valid?

Validating calibration results involves several steps to ensure accuracy, reliability, and compliance with established standards. Here are some key aspects to consider to understand if calibration results are valid:

How can you verify calibration results?

Verifying calibration results involves confirming the accuracy and reliability of the calibration process by using known references or methods. Here's a guide to verifying calibration results:

Conclusion

Calibration is a critical process to ensure the accuracy and reliability of measurement instruments. Whether using Automated Calibration Systems or traditional methods, obtaining valid and verified calibration results is essential for various industries, ensuring precise measurements that underpin quality, safety, and compliance.

Validating calibration results involves a meticulous approach, considering factors such as traceability, adherence to standards, reference standards' accuracy, and measurement uncertainty. Verifying calibration results includes cross-checking with known references, repeatability tests, comparison with published data, and assessing instrument performance under varied conditions.
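Two of the checks named above, repeatability and measurement uncertainty, can be combined into a simple acceptance decision. The Python sketch below follows the usual approach of combining uncorrelated standard uncertainties by root-sum-square and multiplying by a coverage factor k = 2. The readings, display resolution, reference uncertainty, and tolerance are hypothetical, and the guard-banded rule (|error| + U must fit inside the tolerance) is only one of several decision rules a laboratory might adopt.

```python
import math
import statistics
from typing import Sequence


def type_a_uncertainty(readings: Sequence[float]) -> float:
    """Standard uncertainty of the mean from repeated readings: s / sqrt(n)."""
    return statistics.stdev(readings) / math.sqrt(len(readings))


def resolution_uncertainty(resolution: float) -> float:
    """Rectangular distribution of half-width resolution/2 gives resolution / sqrt(12)."""
    return resolution / math.sqrt(12)


def expanded_uncertainty(components: Sequence[float], k: float = 2.0) -> float:
    """Root-sum-square of uncorrelated standard uncertainties, times coverage factor k."""
    return k * math.sqrt(sum(u * u for u in components))


def passes_with_guard_band(error: float, tolerance: float, expanded_u: float) -> bool:
    """Conservative decision rule: accept only if |error| + U stays within tolerance."""
    return abs(error) + expanded_u <= tolerance


if __name__ == "__main__":
    # Hypothetical repeated readings at a 50.00 bar reference point.
    readings = [50.02, 50.03, 50.01, 50.02, 50.03]
    error = statistics.fmean(readings) - 50.00
    U = expanded_uncertainty([
        type_a_uncertainty(readings),
        resolution_uncertainty(0.01),  # assumed display resolution, bar
        0.01,                          # assumed reference standard uncertainty (k=1), bar
    ])
    verdict = "PASS" if passes_with_guard_band(error, 0.05, U) else "FAIL"
    print(f"error {error:+.3f} bar, U(k=2) {U:.3f} bar, {verdict}")
```

For these made-up numbers the error is about 0.022 bar and U is about 0.022 bar, so the point passes a ±0.05 bar tolerance but would fail a ±0.04 bar one.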

Automated Calibration Systems offer efficiency and consistency in the calibration process, integrating advanced software, precision tools, and safety measures. However, their reliability hinges on factors like accurate equipment, robust software management, thorough training, and continuous maintenance.

Ultimately, ensuring valid calibration results involves a comprehensive approach that includes adherence to standardized procedures, regular maintenance, stringent record-keeping, and a commitment to continuous improvement. Valid and verified calibration results are the cornerstone of accurate measurements, contributing significantly to quality assurance, regulatory compliance, and reliable operations across industries.

To recap

1. What is pressure sensor calibration?

Pressure sensor calibration is the process of adjusting and verifying the accuracy of pressure measurements obtained from a pressure sensor by comparing its readings with known reference standards or values.

2. Why is pressure sensor calibration important?

Calibration ensures that pressure sensors provide accurate and reliable measurements, critical for various applications in industries like manufacturing, aerospace, automotive, and healthcare.

3. What are the common pressure sensor calibration methods?

Common pressure sensor calibration methods include deadweight testers, hydraulic or pneumatic calibrators, electrical simulation, multi-point calibration, and zero and span adjustment.

4. How does a deadweight tester work in pressure sensor calibration?

A deadweight tester uses calibrated weights to apply known pressures to a sensor. The weights create a force on a piston, generating a precise pressure that can be compared against the sensor's readings.

5. What is the purpose of zero and span adjustment in pressure sensor calibration?

Zero and span adjustment sets the baseline output (zero) and full-scale range (span) of the pressure sensor to ensure accurate readings within its operational limits.

6. What is multi-point calibration for pressure sensors?

Multi-point calibration involves calibrating pressure sensors at various known pressure levels across their operational range to ensure accuracy and reliability at different measurement points.

7. How does electrical simulation work in pressure sensor calibration?

Electrical simulation generates electrical signals that mimic a pressure sensor's output at known pressure values, allowing the connected transmitter or readout electronics to be calibrated without applying physical pressure.

8. What is the significance of traceability in pressure sensor calibration?

Traceability ensures that calibration results can be traced back to recognized standards, providing assurance of accuracy and reliability in pressure sensor measurements.

9. Can pressure sensor calibration be performed in-house?

Yes, many organizations conduct in-house pressure sensor calibration using calibrated equipment, reference standards, and proper procedures to maintain accuracy and reduce downtime.

10. How often should pressure sensors be calibrated?

Calibration frequency depends on factors such as usage, environmental conditions, manufacturer recommendations, and industry standards. Annual calibration is a common starting point, but critical applications may require more frequent calibration.

